k0s is an open-source, single-binary Kubernetes distribution for building Kubernetes clusters, with no dependencies on the host OS beyond the kernel. It is used in IoT gateways, edge and bare-metal deployments, as well as cloud environments.

Kubernetes is an open-source orchestrator for deploying containerized applications. It was developed by Google for deploying scalable, reliable systems in containers via application-oriented APIs.

k0s key features

k0s has the following key features:

- It is a single binary, which makes it lightweight.

- It can be installed as a single node, as a multi-node cluster, in Docker, and so on.

- It supports x86-64, ARM64 and ARMv7 architectures.

- It has modest requirements, needing minimal system resources (1 vCPU, 1 GB RAM).

- It scales from a single node to highly available clusters.

- It supports etcd, MySQL, PostgreSQL and SQLite.

- It has an isolated control plane for deployment purposes.

- It supports custom CNI and CRI plugins.

- It has built-in security features, e.g. network policies.

See a list of all features here

Why k0s?

The name k0s reflects three "zeros":

- Zero friction: A fully conformant Kubernetes distribution can be installed and running within a few minutes, which makes it easy for non-developers to get started.

- Zero deps: k0s does not depend on anything on the host besides the OS kernel. It works without additional software packages or configuration.

- Zero cost: k0s is completely free for personal and commercial use. Its source code is freely available on GitHub under the Apache 2 license.

System requirements

- Hardware: 2 CPU cores, 4 GB memory.

- Hard disk: SSD highly recommended, 4 GB required.

- Host operating system: Linux kernel version 3.10 and above, or Windows Server 2019.

- Architecture: x86-64, ARM64, ARMv7.

- A user with sudo privileges.

- A Debian Linux system.
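
The requirements above can be checked from a shell before installing anything. The following is a minimal sketch, not an official k0s preflight tool: the function name kernel_ok is invented for this example, and the 3.10 threshold is taken from the list above. It only reads information and changes nothing on the host.

```shell
#!/bin/sh
# Hypothetical helper (not part of k0s): succeeds when the kernel release
# string in $1, e.g. "5.10.0-21-amd64", is at least 3.10.
kernel_ok() {
    major=$(printf '%s' "$1" | cut -d. -f1)
    minor=$(printf '%s' "$1" | cut -d. -f2 | sed 's/[^0-9].*//')
    [ "$major" -gt 3 ] || { [ "$major" -eq 3 ] && [ "${minor:-0}" -ge 10 ]; }
}

# Check the running kernel.
if kernel_ok "$(uname -r)"; then
    echo "kernel $(uname -r): ok"
else
    echo "kernel $(uname -r): older than 3.10"
fi

# Print CPU count and total memory where the usual Linux sources exist.
if command -v nproc >/dev/null 2>&1; then
    echo "cpu cores: $(nproc)"
fi
if [ -r /proc/meminfo ]; then
    awk '/^MemTotal:/ {printf "memory: %d MiB\n", $2 / 1024}' /proc/meminfo
fi
```

Compare the printed CPU and memory figures against the hardware requirements by hand; the sketch deliberately does not hard-code them.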

With that brief preamble out of the way, we can now deploy a Kubernetes cluster on Debian using k0s.

In the following sections, we will install k0s on Debian as a single-node controller, then add worker nodes to the Kubernetes cluster and run a simple deployment to test the installation.

Please follow along and try the same steps on your Debian machine.

Step 1: Update your system

Update your system packages, run:

sudo apt update

sudo apt upgrade -y

sudo systemctl reboot

Once your system reboots, check the architecture of your system:

$ uname -m

Your architecture should meet the requirements.
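
To relate the machine name reported by the kernel to the k0s builds, a small lookup can help. This is a sketch under assumptions: k0s_arch is a hypothetical helper, "amd64" is the suffix visible in this article's download URL, and "arm64" and "arm" are assumed to follow the same naming scheme for the ARM64 and ARMv7 builds.

```shell
#!/bin/sh
# Hypothetical helper: translate `uname -m` output into the architecture
# suffix used in k0s release file names. Only "amd64" is confirmed by this
# article's sample output; the ARM suffixes are assumptions.
k0s_arch() {
    case "$1" in
        x86_64)  echo amd64 ;;
        aarch64) echo arm64 ;;
        armv7l)  echo arm ;;
        *)       echo "unsupported: $1" >&2; return 1 ;;
    esac
}

# Report the suffix for the current machine, or a note if it is not listed.
k0s_arch "$(uname -m)" || echo "this machine does not match a listed architecture"
```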

Step 2: Download k0s Kubernetes binary

k0s ships as a single binary file bundled with all the dependencies required to build a Kubernetes cluster.

Run the commands below to download the k0s binary:

sudo apt install curl

curl -sSLf https://get.k0s.sh | sudo sh

Your sample output would look like this.

Downloading k0s from URL: https://github.com/k0sproject/k0s/releases/download/v1.31.1+k0s.1/k0s-v1.31.1+k0s.1-amd64

k0s is now executable in /usr/local/bin

From the output, our binary file download path is /usr/local/bin.
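
The URL in the sample output follows a regular pattern: the version appears twice and the architecture is the final suffix. As an illustration only, the hypothetical helper below (k0s_download_url is not part of k0s) rebuilds that URL from its parts, which can be handy when you want to fetch a specific release by hand.

```shell
#!/bin/sh
# Hypothetical helper: build a release URL from a version ($1) and an
# architecture suffix ($2), following the pattern in the sample output.
k0s_download_url() {
    printf 'https://github.com/k0sproject/k0s/releases/download/%s/k0s-%s-%s\n' "$1" "$1" "$2"
}

# Reproduces the URL shown in the sample output above.
k0s_download_url "v1.31.1+k0s.1" "amd64"
```

The install script is understood to accept a K0S_VERSION environment variable for pinning a release; treat that as an assumption and check the script itself before relying on it.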

To confirm the k0s binary path:

$ which k0s

/usr/local/bin/k0s

Make sure that /usr/local/bin is in your PATH:

echo "export PATH=\$PATH:/usr/local/bin" | sudo tee -a /etc/profile

source /etc/profile

Echo PATH to confirm that it has been set.

$ echo $PATH

/usr/local/sbin:/usr/local/bin:/usr/sbin:/usr/bin:/root/bin:/usr/local/bin

Step 3: Bootstrap a controller node

To install a single-node k0s that has both controller and worker functions:

# k0s install controller --enable-worker

This installs the controller and worker functions with their default values, as shown in the output.

INFO[2024-11-07 22:36:55] no config file given, using defaults

INFO[2024-11-07 22:36:55] creating user: etcd

INFO[2024-11-07 22:36:55] creating user: kube-apiserver

INFO[2024-11-07 22:36:55] creating user: konnectivity-server

INFO[2024-11-07 22:36:55] creating user: kube-scheduler

INFO[2024-11-07 22:36:56] Installing k0s service

Once the installation completes, start the k0s service. Either of the following commands will do:

# k0s start

# systemctl start k0scontroller

Enable the k0s controller systemd service to start on boot.

# systemctl enable k0scontroller

Check k0s controller status:

# k0s status

Version: v1.31.1+k0s.1

Process ID: 90113

Role: controller

Workloads: true

Systemd service status:

# systemctl status k0scontroller

● k0scontroller.service - k0s - Zero Friction Kubernetes

Loaded: loaded (/etc/systemd/system/k0scontroller.service; enabled; vendor p>

Active: active (running) since Sun 2024-11-07 22:41:15 EAT; 1min 44s ago

Docs: https://docs.k0sproject.io

Main PID: 90113 (k0s)

Tasks: 146

Memory: 1.5G

CGroup: /system.slice/k0scontroller.service

├─88330 /var/lib/k0s/bin/containerd --root=/var/lib/k0s/containerd ->

├─90113 /usr/local/bin/k0s controller --enable-worker=true

├─90120 /var/lib/k0s/bin/etcd --peer-client-cert-auth=true --name=ro>

├─90136 /var/lib/k0s/bin/kube-apiserver --api-audiences=https://kube>

├─90147 /var/lib/k0s/bin/konnectivity-server --logtostderr=true --au>

├─90154 /var/lib/k0s/bin/kube-scheduler --leader-elect=true --profil>

├─90162 /var/lib/k0s/bin/kube-controller-manager --profiling=false ->

├─90170 /usr/local/bin/k0s api --config= --data-dir=/var/lib/k0s

├─90186 /var/lib/k0s/bin/containerd --root=/var/lib/k0s/containerd ->

├─90387 /var/lib/k0s/bin/containerd-shim-runc-v2 -namespace k8s.io ->

├─90464 /var/lib/k0s/bin/containerd-shim-runc-v2 -namespace k8s.io ->

├─90597 /var/lib/k0s/bin/containerd-shim-runc-v2 -namespace k8s.io ->

├─90643 /var/lib/k0s/bin/containerd-shim-runc-v2 -namespace k8s.io ->

└─90644 /var/lib/k0s/bin/containerd-shim-runc-v2 -namespace k8s.io ->

Once k0s is successfully installed, you can access the cluster using the kubectl command:

# k0s kubectl <COMMAND>

For example:

# k0s kubectl get nodes

NAME STATUS ROLES AGE VERSION

debian-linux Ready <none> 7m9s v1.31.1+k0s

# k0s kubectl get pods -A

NAMESPACE NAME READY STATUS RESTARTS AGE

kube-system coredns-5ccbdcc4c4-bptmg 1/1 Running 0 3m34s

kube-system konnectivity-agent-dqpnk 1/1 Running 0 3m26s

kube-system kube-proxy-pxhkj 1/1 Running 0 3m34s

kube-system kube-router-fjqd4 1/1 Running 0 3m36s

kube-system metrics-server-6bd95db5f4-wglkt 1/1 Running 0 3m34s

# k0s kubectl get namespaces

# k0s kubectl get pods --all-namespaces

NAMESPACE NAME READY STATUS RESTARTS AGE

kube-system coredns-5ccbdcc4c4-kcx8p 1/1 Running 0 4m46s

kube-system konnectivity-agent-kl6pm 1/1 Running 0 4m40s

kube-system kube-proxy-cq8c6 1/1 Running 0 4m41s

kube-system kube-router-ps2t7 1/1 Running 0 4m41s

kube-system metrics-server-6bd95db5f4-5zx4n 1/1 Running 0 4m46s

Step 4: Add worker nodes to k0s cluster

To add a worker node to the cluster, k0s must first be installed on that node. In this guide, I will use my Ubuntu server as the worker node.

Install the k0s binary:

$ curl -sSLf https://get.k0s.sh | sudo sh

Downloading k0s from URL: https://github.com/k0sproject/k0s/releases/download/v1.31.1+k0s.1/k0s-v1.31.1+k0s.1-amd64

k0s is now executable in /usr/local/bin

Export the binary path:

echo "export PATH=\$PATH:/usr/local/bin" | sudo tee -a /etc/profile

source /etc/profile

Then allow the ports through the firewall:

sudo firewall-cmd --zone=trusted --add-interface=cni0 --permanent

sudo firewall-cmd --add-port=8090/tcp --permanent

sudo firewall-cmd --add-port=10250/tcp --permanent

sudo firewall-cmd --add-port=10255/tcp --permanent

sudo firewall-cmd --add-port=8472/udp --permanent

sudo firewall-cmd --reload

Next, you need to create a join token that the worker node will use to join the cluster. This token is generated on the controller node.

Issue the command below to generate the token.

k0s token create --role=worker

The resulting output is a very long token string. This token will be used by the worker node to join the cluster.
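
The token is not random text. Its "H4sI" prefix is what a gzip header looks like in base64, and k0s join tokens are understood to wrap a kubeconfig in that form. As a sketch under that assumption, the hypothetical helper decode_join_token below unpacks such a string; the stand-in token is built locally so the example runs without a cluster.

```shell
#!/bin/sh
# Hypothetical helper: decode a join token, assuming it is gzip data
# encoded as base64 (the "H4sI" prefix is the base64 form of a gzip header).
decode_join_token() {
    printf '%s' "$1" | base64 -d | gunzip -c
}

# Build a stand-in token the same way, so no cluster is needed to try this.
sample_token=$(printf 'apiVersion: v1\nkind: Config\n' | gzip -c | base64 | tr -d '\n')

# Prints the two YAML lines that were packed into the stand-in token.
decode_join_token "$sample_token"
```

Because a real token carries credentials for joining the cluster, handle it like a password: avoid pasting it into shared logs or chat, and delete any file you saved it to once the node has joined.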

On the worker node, issue the command below.

k0s worker <login-token>

The command will look like this:

# k0s worker H4sIAAAAAAAC/2yV0Y7iPtLF7/speIGev51Aa0D6Ljpghw7EtB2XTXwX4nQH4oQQAgQ+7buvhpmRdqW9K1cd/Y5lWXVesnaviu68Pzaz0R

You will have a sample output as shown below.

WARN[2024-11-07 22:56:25] failed to load nf_conntrack kernel module: /usr/sbin/modprobe nf_conntrack

INFO[2024-11-07 22:56:25] initializing ContainerD

INFO[2024-11-07 22:56:25] initializing OCIBundleReconciler

INFO[2024-11-07 22:56:25] initializing Kubelet

INFO[2024-11-07 22:56:25] initializing Status

INFO[2024-11-07 22:56:25] Staging /var/lib/k0s/bin/kubelet

INFO[2024-11-07 22:56:25] Listening address /run/k0s/status.sock component=status

INFO[2024-11-07 22:56:25] Staging /var/lib/k0s/bin/containerd

INFO[2024-11-07 22:56:25] Staging /var/lib/k0s/bin/containerd-shim

INFO[2024-11-07 22:56:25] Staging /var/lib/k0s/bin/runc

INFO[2024-11-07 22:56:25] Staging /var/lib/k0s/bin/containerd-shim-runc-v1

INFO[2024-11-07 22:56:25] Staging /var/lib/k0s/bin/containerd-shim-runc-v2

INFO[2024-11-07 22:56:25] Staging /var/lib/k0s/bin/xtables-legacy-multi

WARN[2024-11-07 22:56:25] failed to load nf_conntrack kernel module: /usr/sbin/modprobe nf_conntrack

INFO[2024-11-07 22:56:25] starting ContainerD

INFO[2024-11-07 22:56:25] Starting containerD

INFO[2024-11-07 22:56:25] Starting to supervise component=containerd

INFO[2024-11-07 22:56:25] Started successfully, go nuts component=containerd

INFO[2024-11-07 22:56:25] time="2021-11-21T22:56:25.722770670+03:00" level=info msg="starting containerd" revision=8686ededfc90076914c5238eb96c883ea093a8ba version=v2.0.0 component=containerd

The process takes some time as the containerd components are initialized.
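
While the worker starts up, it may report a status other than Ready for a short while. As a small sketch for checking this from a script, the hypothetical helper not_ready_nodes below reads rows in the format printed by `k0s kubectl get nodes --no-headers` and prints the names of nodes whose STATUS column is not exactly Ready. The sample rows are modeled on this article's output, with one status changed for illustration.

```shell
#!/bin/sh
# Hypothetical helper: read `kubectl get nodes --no-headers` rows on stdin
# and print the name of every node whose STATUS column is not "Ready".
not_ready_nodes() {
    awk '$2 != "Ready" {print $1}'
}

# Illustrative rows; the NotReady status is made up for the example.
sample='debian-linux Ready <none> 17m v1.31.1+k0s
ubuntu-server NotReady <none> 10s v1.31.1+k0s'

# Prints: ubuntu-server
printf '%s\n' "$sample" | not_ready_nodes

# Against a live cluster, on the controller node:
#   k0s kubectl get nodes --no-headers | not_ready_nodes
```

An empty result means every listed node is Ready. kubectl also has a built-in `wait --for=condition=Ready` subcommand that blocks until nodes are ready, which may suit automation better than polling.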

You can now list your nodes on the control node:

# k0s kubectl get nodes

NAME STATUS ROLES AGE VERSION

debian-linux Ready <none> 17m v1.31.1+k0s

ubuntu-server Ready <none> 2m58s v1.31.1+k0s

Step 5: Add controllers to the cluster

To add a controller node to a cluster, you carry out steps similar to those for adding a worker node. I will add a Fedora server, 192.168.201.3, as a controller node.

Download the binary file:

curl -sSLf https://get.k0s.sh | sudo sh

Command output:

$ curl -sSLf https://get.k0s.sh | sudo sh

Downloading k0s from URL: https://github.com/k0sproject/k0s/releases/download/v1.31.1+k0s.1/k0s-v1.31.1+k0s.1-amd64

k0s is now executable in /usr/local/bin

Export the binary path:

echo "export PATH=\$PATH:/usr/local/bin" | sudo tee -a /etc/profile

source /etc/profile

The next step is to generate a token on the existing k0s controller node.

Run the command:

k0s token create --role=controller

Use the generated token to join the new controller node to the cluster. On the new controller node, run:

k0s controller "<token>"

It will look like this:

# k0s controller H4sIAAAAAAAC/3RVwY7qOhbc91fwA32fnUDrBWkWHbBDB2Lajo9NvAtxLoE4IR3SkGY0/z5q7r3SzOLtjk+VqizLqnrKu6Mq+8vx3M4nV/xUuM/LU

Sample output:

INFO[2024-11-07 23:04:39] no config file given, using defaults

INFO[2024-11-07 23:04:39] using api address: 192.168.201.3

INFO[2024-11-07 23:04:39] using listen port: 6443

INFO[2024-11-07 23:04:39] using sans: [192.168.201.3]

INFO[2024-11-07 23:04:39] DNS address: 10.96.0.10

INFO[2024-11-07 23:04:39] Using storage backend etcd

INFO[2024-11-07 23:04:39] initializing Certificates

2024/11/07 23:04:39 [INFO] generate received request

2024/11/07 23:04:39 [INFO] received CSR

Check the status of the new controller node:

# k0s status

Version: v1.31.1+k0s.1

Process ID: 76539

Parent Process ID: 1

Role: controller

Init System: linux-systemd

Service file: /etc/systemd/system/k0scontroller.service

The status report shows that our new controller node has joined the cluster. This controller node won't appear when we list the cluster nodes, because a controller that runs without worker functions does not register as a Kubernetes node.

Step 6: Managing k0s cluster remotely

k0s can also be managed remotely via native kubectl. k0s stores the KUBECONFIG file in /var/lib/k0s/pki/admin.conf. The file can be copied and used to access your cluster remotely.

The file contains certificate-authority-data for the server, plus client-certificate-data and client-key-data, all of which are long encoded strings.

Copy the file to your home directory or your preferred location.

sudo cp /var/lib/k0s/pki/admin.conf ~/k0s.conf

sudo chown $USER ~/k0s.conf

Then download the copied k0s.conf file from your controller node to your remote machine, using the following syntax:

scp <username>@<SERVER_IP>:~/k0s.conf .

For example:

scp [email protected]:~/k0s.conf .

Sample output:

# scp [email protected]:~/k0s.conf .

[email protected]'s password:

k0s.conf 100% 5687 5.1MB/s

Modify the server address in the k0s.conf file from localhost to the IP address of the controller node.

Then export the config file.

export KUBECONFIG=k0s.conf

This way, you are able to remotely manage and configure k0s.
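
The manual edit of the host entry described above can also be scripted. The following is a minimal sketch, assuming the copied file contains a line of the form `server: https://localhost:6443`; set_kubeconfig_server is a hypothetical helper, and 192.0.2.10 is a placeholder address standing in for your controller's IP. The demo runs against a throwaway file, so it does not touch a real kubeconfig.

```shell
#!/bin/sh
# Hypothetical helper: in the kubeconfig at $1, replace "localhost" in the
# server URL with the controller address given in $2.
set_kubeconfig_server() {
    sed "s#\(server: https://\)localhost#\1$2#" "$1" > "$1.tmp" && mv "$1.tmp" "$1"
}

# Demonstrate on a temporary file that mimics the relevant line.
demo=$(mktemp)
printf '    server: https://localhost:6443\n' > "$demo"
set_kubeconfig_server "$demo" "192.0.2.10"   # placeholder controller address
cat "$demo"
rm -f "$demo"

# On the remote machine, the real steps would then be along these lines:
#   set_kubeconfig_server ./k0s.conf <controller-ip>
#   export KUBECONFIG="$PWD/k0s.conf"
```

Using an absolute path for KUBECONFIG, as in the last comment, keeps kubectl working after you change directories.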

Step 7: Deploy Application on k0s Kubernetes

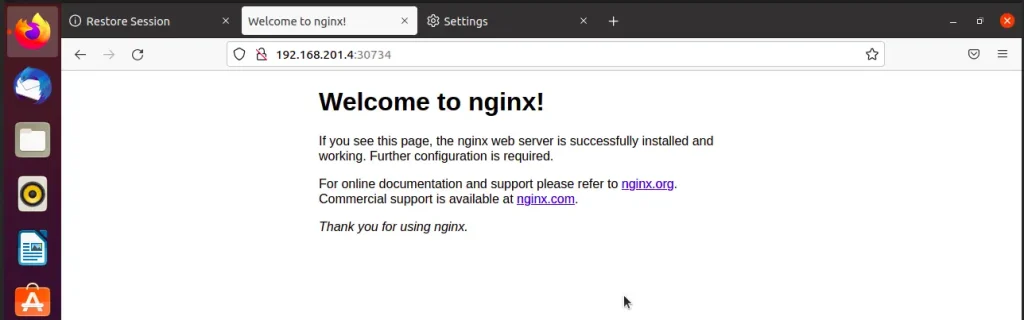

Let’s test our deployment using Nginx web server to see if we are successful.

k0s kubectl apply -f - <<EOF

apiVersion: apps/v1

kind: Deployment

metadata:

  name: nginx-deployment

spec:

  selector:

    matchLabels:

      app: nginx

  replicas: 4

  template:

    metadata:

      labels:

        app: nginx

    spec:

      containers:

      - name: nginx

        image: nginx:latest

        ports:

        - containerPort: 80

EOF

Sample output:

deployment.apps/nginx-deployment created

Check pod status:

# k0s kubectl get pods

NAME READY STATUS RESTARTS AGE

nginx-deployment-585449566-46c8g 1/1 Running 0 2m6s

nginx-deployment-585449566-552hk 1/1 Running 0 2m6s

nginx-deployment-585449566-b79jj 1/1 Running 0 2m6s

nginx-deployment-585449566-rl6v2 1/1 Running 0 2m6s

Four replicas have been created, as specified in the manifest.
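
Counting rows by eye works for four pods, but a script can do the same check. As a sketch, the hypothetical helper running_pods below reads rows in the format printed by `k0s kubectl get pods --no-headers` and counts those whose name starts with a given prefix and whose STATUS is Running. The sample rows are the ones from this article's output.

```shell
#!/bin/sh
# Hypothetical helper: count pods whose name starts with the prefix in $1
# and whose STATUS column is "Running"; rows are read from stdin.
running_pods() {
    awk -v p="$1" 'index($1, p) == 1 && $3 == "Running" {n++} END {print n + 0}'
}

sample='nginx-deployment-585449566-46c8g 1/1 Running 0 2m6s
nginx-deployment-585449566-552hk 1/1 Running 0 2m6s
nginx-deployment-585449566-b79jj 1/1 Running 0 2m6s
nginx-deployment-585449566-rl6v2 1/1 Running 0 2m6s'

# Prints: 4
printf '%s\n' "$sample" | running_pods "nginx-deployment-"

# Against a live cluster:
#   k0s kubectl get pods --no-headers | running_pods "nginx-deployment-"
```

If the count is lower than the replica count in the manifest, some pods are still starting or have failed; `kubectl rollout status` on the deployment is another way to wait for it to settle.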

Expose the port on which the pods are listening:

# k0s kubectl expose deployment nginx-deployment --type=NodePort --port=80

service/nginx-deployment exposed

Check the ports through which the services were exposed.

# k0s kubectl get svc

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 92m

nginx-deployment NodePort 10.96.184.177 <none> 80:30734/TCP 2m4s

From the output, our Nginx service has been exposed through NodePort on port 30734. We can access the application in a browser using the worker node's IP address and this port.

My worker node is 192.168.201.4, so the browser URL is http://192.168.201.4:30734

If the Nginx welcome page loads:

Congratulations, you have followed along well.
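
The browser check can also be done from a terminal. The NodePort is assigned by the cluster, so it will differ on your system; as a sketch, the hypothetical helper node_port below pulls it out of a `k0s kubectl get svc` row instead of copying it by hand. The sample row is the one from this article's output.

```shell
#!/bin/sh
# Hypothetical helper: read one `kubectl get svc` row on stdin and print the
# node port from its PORT(S) column (the number after the colon).
node_port() {
    awk '{print $5}' | sed 's/^[0-9]*:\([0-9]*\)\/.*$/\1/'
}

row='nginx-deployment NodePort 10.96.184.177 <none> 80:30734/TCP 2m4s'

# Prints: 30734
printf '%s\n' "$row" | node_port

# Against a live cluster, the port could then be used with curl, for example:
#   port=$(k0s kubectl get svc nginx-deployment --no-headers | node_port)
#   curl -sI "http://192.168.201.4:$port"
```

kubectl can also print the value directly with `-o jsonpath='{.spec.ports[0].nodePort}'`, which avoids parsing columns altogether.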

Step 8: Uninstalling k0s Kubernetes Cluster

To uninstall the k0s Kubernetes cluster, first stop the k0s service:

# k0s stop

Then remove the k0s setup:

# k0s reset

Sample output:

# k0s reset

INFO[2024-11-07 21:22:05] * containers steps

INFO[2024-11-07 21:22:10] successfully removed k0s containers!

INFO[2024-11-07 21:22:10] no config file given, using defaults

INFO[2024-11-07 21:22:10] * remove k0s users step:

INFO[2024-11-07 21:22:10] no config file given, using defaults

INFO[2024-11-07 21:22:10] * uninstall service step

INFO[2024-11-07 21:22:10] Uninstalling the k0s service

INFO[2024-11-07 21:22:11] * remove directories step

INFO[2024-11-07 21:22:11] * CNI leftovers cleanup step

INFO k0s cleanup operations done. To ensure a full reset, a node reboot is recommended.

Then reboot.

# systemctl reboot