Apache Spark is an open-source, multi-language unified analytics engine that is simple, fast, and scalable. It is used for large-scale data processing, data engineering, data science, and machine learning on single-node machines or clusters. It offers high-level APIs for Java, Scala, Python, and R, and an optimized engine that supports general execution graphs. It also includes Spark SQL for SQL and structured data processing, the pandas API on Spark for pandas workloads, MLlib for machine learning, GraphX for graph processing, and Structured Streaming for incremental computation and stream processing.

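Apache Spark began as a research project at UC Berkeley’s AMPLab in 2009 and was open-sourced in 2010. It was donated to the Apache Software Foundation in 2013, became a top-level Apache project in 2014, and is licensed under the Apache License 2.0. Spark itself is written mainly in Scala and exposes APIs for Python, Java, and R.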

As of this writing, the latest release is version 3.5.4. It is available as a pre-built binary package or as source code. Spark uses Hadoop’s client libraries for HDFS and YARN. Scala and Java developers can include Spark in their projects using its Maven coordinates, and Python developers can install it from PyPI. Spark runs on Windows and UNIX-like systems such as Linux and macOS, and on any platform that runs a supported version of Java, on both x86_64 and ARM64.

Spark needs a working Java installation: either the java binary must be on your system’s PATH, or the JAVA_HOME environment variable must point to your Java installation. The Spark 3.5 documentation lists Java 8/11/17, Scala 2.12/2.13, Python 3.8+, and R 3.5+ as supported. Java 21, which this guide installs, is newer than that documented list, so switch to Java 17 if you run into compatibility problems.
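As a quick check of the Java requirement, the sketch below reports which java binary would be found on the machine. The function name find_java is hypothetical (it is not part of Spark), and checking JAVA_HOME before PATH is an assumption about lookup order rather than something this guide states.

```shell
#!/usr/bin/env bash
# find_java is a hypothetical helper, not part of Spark.
# It prints the path of a usable java binary, preferring JAVA_HOME over PATH
# (that precedence is an assumption), and returns non-zero if none is found.
find_java() {
  if [ -n "${JAVA_HOME:-}" ] && [ -x "${JAVA_HOME}/bin/java" ]; then
    printf '%s\n' "${JAVA_HOME}/bin/java"
  elif command -v java >/dev/null 2>&1; then
    command -v java
  else
    echo "No Java found: install a JDK or set JAVA_HOME" >&2
    return 1
  fi
}
```

After defining the function, running find_java prints the path it found, or an error message if Java still needs to be installed.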

Key features

Apache Spark has the following key features:

- Batch/streaming data – Process data in batches or as real-time streams using Python, SQL, Scala, Java, or R.

- SQL analytics – Run fast, distributed ANSI SQL queries for dashboards and ad-hoc reports. According to the Spark project, it runs faster than most data warehouses.

- Data science at scale – Perform exploratory data analysis (EDA) on petabyte-scale data without resorting to downsampling.

- Machine learning – Train machine learning algorithms on your laptop and use the same code to scale to fault-tolerant clusters of thousands of machines.

- Lightweight – Spark is a lightweight unified analytics engine for large-scale data processing.

- Integrations – Spark integrates with many frameworks and tools, such as Power BI and Tableau, and helps them scale to multiple machines.

- Storage and infrastructure – Spark supports a range of storage systems and infrastructure, including Elasticsearch, MongoDB, Kubernetes, Kafka, Cassandra, and SQL Server.

- Spark SQL works on structured tables and unstructured data such as JSON or images.

- Spark SQL adapts the execution plan at runtime, for example by automatically setting the number of reducers and choosing join algorithms.

- It has hundreds of community contributors across the globe.

- Spark is easy to use – You do not need a cluster to get started; a single machine is enough.

- Spark has APIs for Scala, Java, Python, and R. Simply choose your preferred programming language.

Install and Configure Apache Spark on Oracle Linux 9/CentOS 9

To install Apache Spark, your system should meet the following minimum requirements:

- Minimum memory of 8GB of RAM

- Java 8 or above.

- At least 20 GB of free space

- Stable internet connection.

- An account with sudo privileges.
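The memory and disk requirements above can be checked with a short script. This is a minimal sketch that assumes a Linux system with /proc/meminfo and assumes the free space that matters is on the root filesystem (/); the helper name at_least and the variable names are hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical pre-flight check; the thresholds come from the requirements list.
MIN_MEM_GB=8
MIN_DISK_GB=20

# at_least ACTUAL REQUIRED: succeeds when ACTUAL >= REQUIRED (whole numbers).
at_least() {
  [ "$1" -ge "$2" ]
}

# Total RAM in GiB, rounded to the nearest whole number, because a machine
# sold as 8 GB usually reports slightly less than 8 GiB in MemTotal.
mem_gb=$(awk '/^MemTotal:/ {print int($2 / 1024 / 1024 + 0.5)}' /proc/meminfo)

# Free space on / in whole GiB (df -P prints POSIX-format output, -k uses 1K blocks).
disk_gb=$(df -Pk / | awk 'NR==2 {print int($4 / 1024 / 1024)}')

at_least "$mem_gb" "$MIN_MEM_GB" || echo "Warning: only ${mem_gb} GiB of RAM detected"
at_least "$disk_gb" "$MIN_DISK_GB" || echo "Warning: only ${disk_gb} GiB free on /"
```

The script prints nothing when both requirements are met and one warning per requirement that is not.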

Before the installation process, update your system package repository.

sudo dnf update -y

Begin the installation process.

Step 1: Install Java

I will install Java 21, the latest LTS release at the time of writing.

Install Java using yum / dnf

Run the commands below to install Java:

sudo yum install -y java-21-openjdk java-21-openjdk-devel

Manual installation of Java

Install required packages:

sudo dnf -y install wget curl

Navigate to the official Java Downloads page for the latest release and copy the link address of the x64 RPM package.

Download the package as shown below.

sudo wget https://download.oracle.com/java/21/latest/jdk-21_linux-x64_bin.rpm

Once the RPM file is downloaded, install Java 21 with the following command.

sudo rpm -Uvh jdk-21_linux-x64_bin.rpm

Inspect the installed version of Java.

$ java -version

openjdk version "21.0.5" 2024-10-15 LTS

OpenJDK Runtime Environment (Red_Hat-21.0.5.0.11-1.0.1) (build 21.0.5+11-LTS)

OpenJDK 64-Bit Server VM (Red_Hat-21.0.5.0.11-1.0.1) (build 21.0.5+11-LTS, mixed mode, sharing)

Set Java environment variables

sudo tee /etc/profile.d/java21.sh <<EOF

export JAVA_HOME=\$(dirname \$(dirname \$(readlink \$(readlink \$(which javac)))))

export PATH=\$PATH:\$JAVA_HOME/bin

export CLASSPATH=.:\$JAVA_HOME/jre/lib:\$JAVA_HOME/lib:\$JAVA_HOME/lib/tools.jar

EOF

Activate the Java environment:

source /etc/profile.d/java21.sh

Examine the Java variables by running the commands below.

$ echo $JAVA_HOME

/usr/lib/jvm/java-21-openjdk-21.0.5.0.11-2.0.1.el9.x86_64

$ echo $PATH

/root/.local/bin:/root/bin:/usr/local/sbin:/usr/local/bin:/usr/sbin:/usr/bin:/usr/lib/jvm/java-21-openjdk-21.0.5.0.11-2.0.1.el9.x86_64/bin

$ echo $CLASSPATH

.:/usr/lib/jvm/java-21-openjdk-21.0.5.0.11-2.0.1.el9.x86_64/jre/lib:/usr/lib/jvm/java-21-openjdk-21.0.5.0.11-2.0.1.el9.x86_64/lib:/usr/lib/jvm/java-21-openjdk-21.0.5.0.11-2.0.1.el9.x86_64/lib/tools.jar

Step 2: Download and install Scala

Download the latest version of Scala by visiting the Scala Downloads page and copying the installation script.

Run the following command in your terminal:

sudo dnf install -y wget curl

curl -fL https://github.com/coursier/coursier/releases/latest/download/cs-x86_64-pc-linux.gz | gzip -d > cs && chmod +x cs && ./cs setup

Set Scala environment variables:

sudo tee -a ~/.bashrc <<EOF

export SCALA_HOME=~/.local/share/coursier

export PATH=\$PATH:\$SCALA_HOME/bin

EOF

Source the file to start using Scala without logging out and back in:

source ~/.bashrc

Check the installed Scala version:

$ scala -version

Scala code runner version: 1.5.4

Scala version (default): 3.6.3

The latest version of Scala has been installed.
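The tee -a command in this step appends the export lines every time it runs, so repeating the step leaves duplicate lines in ~/.bashrc. A minimal sketch of an idempotent alternative follows; append_once is a hypothetical helper name, not a standard command.

```shell
#!/usr/bin/env bash
# append_once LINE FILE: hypothetical helper that appends LINE to FILE
# only when FILE does not already contain that exact line.
append_once() {
  local line="$1" file="$2"
  # -x matches whole lines, -F treats LINE as a fixed string, -q stays silent.
  grep -qxF -- "$line" "$file" 2>/dev/null || printf '%s\n' "$line" >> "$file"
}

# Example calls (single quotes keep $PATH unexpanded until .bashrc is sourced):
#   append_once 'export SCALA_HOME=~/.local/share/coursier' ~/.bashrc
#   append_once 'export PATH=$PATH:$SCALA_HOME/bin' ~/.bashrc
```

Because the helper compares whole lines, running the example calls a second time leaves ~/.bashrc unchanged.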

Step 3: Downloading & Installing Apache Spark

The next step is to download and install Apache Spark. Download the latest version of Spark from the official website; at the time of writing, that is version 3.5.4. For the package type, choose ‘Pre-built for Apache Hadoop’. Apache Spark is not available in the default Oracle Linux repositories, so you have to download it and install it manually. I downloaded it to my home directory.

cd ~/

sudo wget https://dlcdn.apache.org/spark/spark-3.5.4/spark-3.5.4-bin-hadoop3.tgz

Extract the Spark tarball.

tar xvf spark-3.5.4-bin-hadoop3.tgz

Move the Spark software files to the respective directory (/usr/local/spark).

sudo mv spark-3.5.4-bin-hadoop3 /usr/local/spark

Step 4: Set up the Spark environment

Add the Spark bin directory to your PATH by editing ~/.bashrc.

sudo vim ~/.bashrc

#add this line

export PATH=$PATH:/usr/local/spark/bin

Source the ~/.bashrc file.

source ~/.bashrc

Step 5: Verifying the Spark Installation

To verify Spark installation, run the command below.

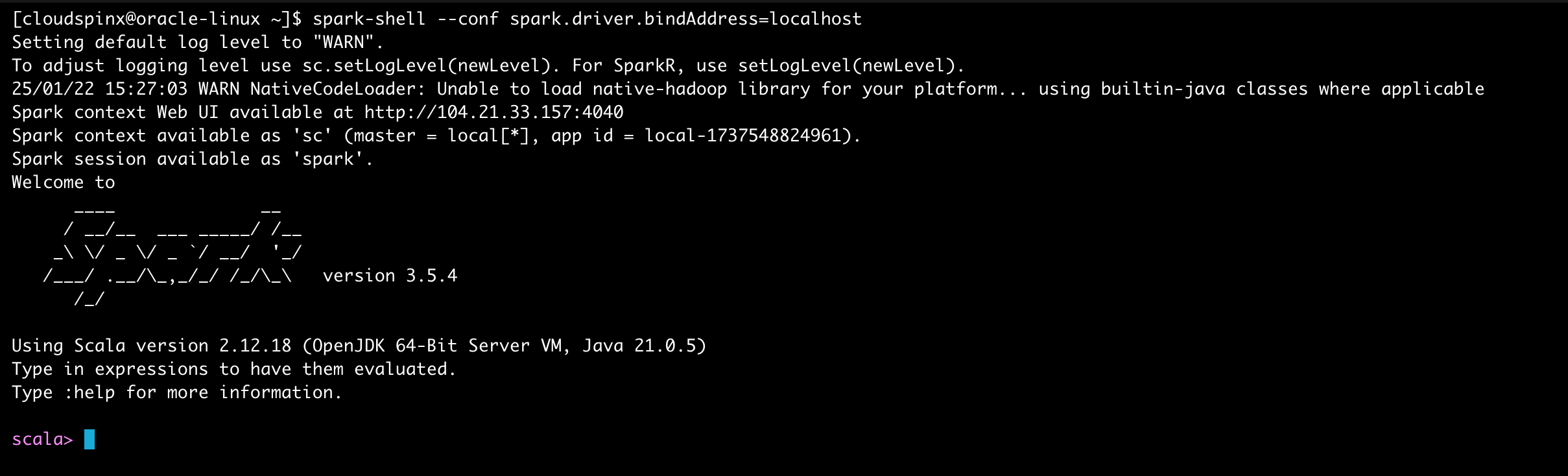

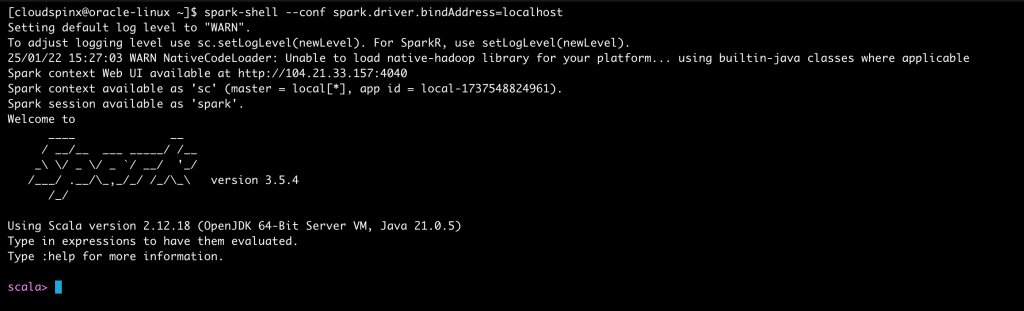

spark-shell --conf spark.driver.bindAddress=localhostThis will give the following output:

Now we have successfully installed spark on Oracle Linux System.

To check the version from the Spark shell, issue the command:

scala> sc.version

res0: String = 3.5.4

scala> spark.version

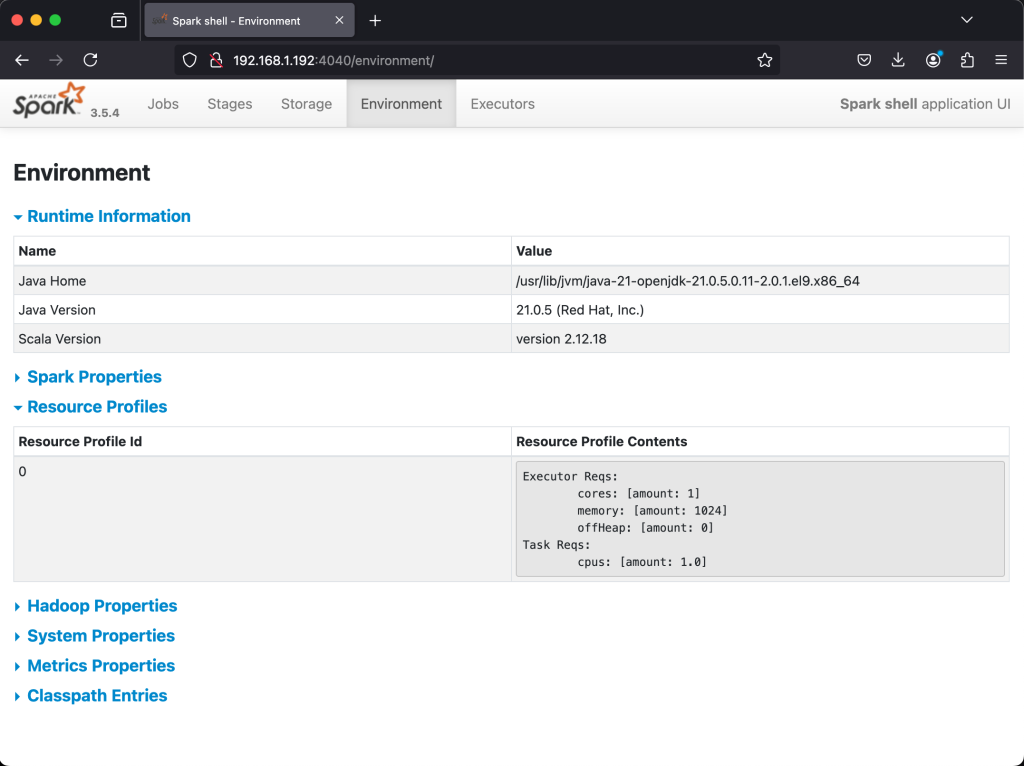

res1: String = 3.5.4Spark context Web UI available at http://<server_ip_address>:4040.

To access the Web UI, allow port 4040 through the firewall.

sudo firewall-cmd --zone=public --add-port=4040/tcp --permanent

sudo firewall-cmd --reload

Now access the Web UI in your browser at http://<server_ip_address>:4040.
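If the page does not load, it may help to confirm that the firewall rule took effect. The sketch below checks the space-separated list printed by firewall-cmd --list-ports for a given entry. The helper name port_listed is hypothetical, and it only matches exact entries, so a port opened as part of a range is not detected.

```shell
#!/usr/bin/env bash
# port_listed PORT/PROTO: hypothetical helper that reads a space-separated
# port list on stdin (the format printed by `firewall-cmd --list-ports`)
# and succeeds when PORT/PROTO appears as an exact entry.
port_listed() {
  tr ' ' '\n' | grep -qxF -- "$1"
}

# Example (requires firewalld to be running):
#   sudo firewall-cmd --zone=public --list-ports | port_listed 4040/tcp \
#     && echo "4040/tcp is open" || echo "4040/tcp is not open"
```

Matching whole entries avoids false positives such as 14040/tcp being mistaken for 4040/tcp.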

Step 6: Create a simple RDD

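For demonstration, I will create a simple RDD and DataFrame. An RDD can be created in three ways: by parallelizing an existing collection, by loading an external dataset, or by transforming an existing RDD. This guide uses the first.

Define an array, then parallelize it to create an RDD.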

scala> val nums = Array(1,2,3,5,6)

nums: Array[Int] = Array(1, 2, 3, 5, 6)

scala> val rdd = sc.parallelize(nums)

rdd: org.apache.spark.rdd.RDD[Int] = ParallelCollectionRDD[0] at parallelize at <console>:24

Create a DataFrame from the RDD.

scala> import spark.implicits._

import spark.implicits._

scala> val df = rdd.toDF("num")

df: org.apache.spark.sql.DataFrame = [num: int]

To display the data in the DataFrame:

scala> df.show()

+---+

|num|

+---+

|  1|

|  2|

|  3|

|  5|

|  6|

+---+

Conclusion

That marks the end of today’s guide. We have covered the basic installation and looked at the main Apache Spark features. In the coming guides, we will take a deeper dive. In the meantime, please visit the Apache Spark documentation for more insights. I hope your installation was successful.
