Elasticsearch is an open source full-text search and analytics engine used for storing, searching, and analysing large volumes of data in near real time. It exposes a RESTful API, i.e. you use HTTP methods in combination with an HTTP URI to manipulate data. It is built on Apache Lucene, which is licensed under the Apache License 2.0. Common Elasticsearch use cases include log analytics, full-text search, and security intelligence.

In this article, I will demonstrate how to set up a three-node Elasticsearch cluster on Rocky Linux / AlmaLinux 8 using Ansible. This tutorial will help Linux users install and configure a multi-node Elasticsearch cluster.

The 3 nodes are split across the following roles:

- Master node – Handles cluster-wide operations such as cluster management and allocating data shards to the data nodes.

- Data node – Holds the shards of the indexed data. Data nodes handle CRUD, search, and aggregation operations, so they consume more memory and I/O.

In this tutorial, we will use 1 Master node and 2 Data nodes.

Step 1 – Prepare the servers

Before we begin ensure:

- You have 3 servers and a client machine installed and updated.

| Server Host_Name | Host_IP | Server Role |
|---|---|---|
| 1. Client machine | 192.168.1.15 | Ansible |
| 2. master | 172.16.120.129 | Master Node |
| 3. data-01 | 172.16.120.130 | Data Node 1 |
| 4. data-02 | 172.16.120.131 | Data Node 2 |

- A user with sudo privileges or root.

Step 2 – Install Ansible on the local system

In this tutorial, we will use Ansible to set up the Elasticsearch cluster. Install Ansible on the client machine for easy administration.

sudo yum -y install epel-release

sudo yum install ansible -y

Confirm the Ansible installation:

$ ansible --version

ansible [core 2.16.3]

config file = /etc/ansible/ansible.cfg

configured module search path = ['/root/.ansible/plugins/modules', '/usr/share/ansible/plugins/modules']

ansible python module location = /usr/lib/python3.12/site-packages/ansible

ansible collection location = /root/.ansible/collections:/usr/share/ansible/collections

executable location = /usr/bin/ansible

python version = 3.12.3 (main, Jul 2 2024, 20:57:30) [GCC 8.5.0 20210514 (Red Hat 8.5.0-22)] (/usr/bin/python3.12)

jinja version = 3.1.2

libyaml = True

Step 3 – Import Elasticsearch Ansible Role

After a successful Ansible installation, we now import the Elasticsearch Ansible role on the client machine.

Install the latest version of the role:

ansible-galaxy install elastic.elasticsearch

Or install a specific version, for example:

$ ansible-galaxy install elastic.elasticsearch,v7.17.0

- downloading role 'elasticsearch', owned by elastic

- downloading role from https://github.com/elastic/ansible-elasticsearch/archive/v7.17.0.tar.gz

- extracting elastic.elasticsearch to /root/.ansible/roles/elastic.elasticsearch

- elastic.elasticsearch (v7.17.0) was installed successfully

Here, 7.17.0 was the latest release when I wrote this tutorial. You can check the latest available version of Elasticsearch from the release page.
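To make the pinned version repeatable across machines, the role can also be listed in a requirements file and installed with `ansible-galaxy install -r`. The sketch below shows one way to do that. The file name `requirements.yml` and the helper name `write_role_requirements` are illustrative choices, not something the role prescribes.

```shell
# Write a role requirements file that pins the role to the version used
# in this article. The default file name is only a convention.
write_role_requirements() {
  local target="${1:-requirements.yml}"
  cat > "$target" <<'EOF'
- src: elastic.elasticsearch
  version: v7.17.0
EOF
}
```

Running `write_role_requirements && ansible-galaxy install -r requirements.yml` should then install the same v7.17.0 release as the command above.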

Confirm the added role in the ~/.ansible/roles directory.

$ ls ~/.ansible/roles

elastic.elasticsearch

Next, configure SSH for the Elasticsearch cluster hosts:

vim ~/.ssh/config

Edit your configuration to resemble this:

# Elasticsearch master nodes
Host master
  Hostname 172.16.120.129
  User rocky

# Elasticsearch worker nodes
Host data-01
  Hostname 172.16.120.130
  User rocky

Host data-02
  Hostname 172.16.120.131
  User rocky

Also add SSH keys to all the machines.

Copy your SSH public key from your local machine to the 3 nodes, replacing username@remotehost with your own. If you do not have a key pair yet, generate one first with ssh-keygen.

### For the master node ###

ssh-copy-id [email protected]

### Data nodes ###

ssh-copy-id [email protected]

ssh-copy-id [email protected]

Confirm that the keys were added successfully by logging in over SSH without a password.
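Since `~/.ssh/config` already defines the aliases `master`, `data-01`, and `data-02`, the same check can be run against all three nodes in one pass. This is a minimal sketch: `check_passwordless_ssh` is a hypothetical helper name, and it relies on OpenSSH's `BatchMode=yes` option, which makes `ssh` fail instead of prompting for a password.

```shell
# Try a non-interactive login on each host and report the result.
# Returns non-zero if any host could not be reached without a password.
check_passwordless_ssh() {
  local host failed=0
  for host in "$@"; do
    if ssh -o BatchMode=yes -o ConnectTimeout=5 "$host" true; then
      echo "$host: ok"
    else
      echo "$host: FAILED"
      failed=1
    fi
  done
  return "$failed"
}
```

For example, `check_passwordless_ssh master data-01 data-02` prints one line per host. A manual login to a single node looks like this: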

The master node:

$ ssh [email protected]

Last login: Sun Jul 18 08:11:53 2021 from 192.168.1.15

[rocky@master ~]$

In case your private key has a passphrase, add it to ssh-agent so you are not prompted for it repeatedly.

$ eval `ssh-agent -s` && ssh-add

Agent pid 2862948

Identity added: /home/ethicalhacker/.ssh/id_rsa (/home/ethicalhacker/.ssh/id_rsa)

Step 4 – Run Elasticsearch Playbook

With all the above configurations set, we now create a playbook and run it.

vim elk.yml

Edit your content as below:

- hosts: el-master-nodes
  roles:
    - role: elastic.elasticsearch
  vars:
    es_enable_xpack: false
    es_data_dirs:
      - "/data/elasticsearch/data"
    es_log_dir: "/data/elasticsearch/logs"
    es_java_install: true
    es_heap_size: "1g"
    es_config:
      cluster.name: "el-cluster"
      cluster.initial_master_nodes: "172.16.120.129:9300"
      discovery.seed_hosts: "172.16.120.129:9300"
      http.port: 9200
      node.data: false
      node.master: true
      bootstrap.memory_lock: false
      network.host: '0.0.0.0'
    es_plugins:
      - plugin: ingest-attachment

- hosts: el-data-nodes
  roles:
    - role: elastic.elasticsearch
  vars:
    es_enable_xpack: false
    es_data_dirs:
      - "/data/elasticsearch/data"
    es_log_dir: "/data/elasticsearch/logs"
    es_java_install: true
    es_config:
      cluster.name: "el-cluster"
      # Data nodes are not master-eligible, so discovery points at the master node
      cluster.initial_master_nodes: "172.16.120.129:9300"
      discovery.seed_hosts: "172.16.120.129:9300"
      http.port: 9200
      node.data: true
      node.master: false
      bootstrap.memory_lock: false
      network.host: '0.0.0.0'
    es_plugins:
      - plugin: ingest-attachment

In the above configuration:

- The master node has node.master set to true and node.data set to false.

- The data nodes have node.master set to false and node.data set to true.

- Shard data is stored in /data/elasticsearch/data.

- Logs are stored in /data/elasticsearch/logs.
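Before a long playbook run, it can help to confirm that Ansible can reach every host and that the playbook parses. The sketch below is one way to do that, assuming the inventory file `hosts` described in the next step already exists. `preflight_playbook` is a hypothetical helper name. `ansible -m ping` and `ansible-playbook --syntax-check` are standard Ansible commands.

```shell
# Run two cheap checks before the real deployment:
#   1. an ad-hoc ping of every host in the inventory
#   2. a syntax check of the playbook (no tasks are executed)
preflight_playbook() {
  local inventory="${1:-hosts}"
  local playbook="${2:-elk.yml}"
  ansible -i "$inventory" all -m ping &&
    ansible-playbook -i "$inventory" "$playbook" --syntax-check
}
```

Calling `preflight_playbook hosts elk.yml` stops at the first failing check, so an unreachable node shows up before any installation work starts.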

With the above configuration set, create an inventory file as below:

$ vim hosts

[el-master-nodes]
master

[el-data-nodes]
data-01
data-02

Now run the playbook:

ansible-playbook -i hosts elk.yml

Sample output:

TASK [elastic.elasticsearch : remove x-pack plugin directory when it isn't a plugin] ***

ok: [master]

ok: [data-02]

ok: [data-01]

TASK [elastic.elasticsearch : Check installed elasticsearch plugins] ***********

ok: [data-02]

ok: [master]

ok: [data-01]

TASK [elastic.elasticsearch : set fact plugins_to_remove to install_plugins.stdout_lines] ***

skipping: [data-01]

skipping: [data-02]

skipping: [master]

.............................

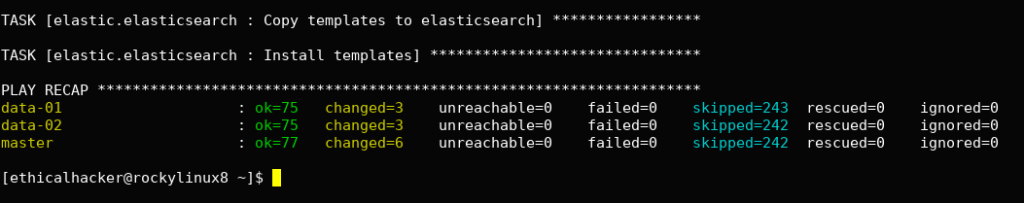

This takes quite some time. On completion, you should see this:

TASK [elastic.elasticsearch : Copy templates to elasticsearch] *****************

TASK [elastic.elasticsearch : Install templates] *******************************

PLAY RECAP *********************************************************************

data-01 : ok=75 changed=3 unreachable=0 failed=0 skipped=243 rescued=0 ignored=0

data-02 : ok=75 changed=3 unreachable=0 failed=0 skipped=242 rescued=0 ignored=0

master : ok=77 changed=6 unreachable=0 failed=0 skipped=242 rescued=0 ignored=0

If Elasticsearch fails to restart, run the following on the nodes to increase the start timeout, then restart the service.

sudo vi /usr/lib/systemd/system/elasticsearch.service

In the file, add the line TimeoutStartSec=500:

# Disable timeout logic and wait until process is stopped
TimeoutStopSec=0
TimeoutStartSec=500

Reload the daemon:

sudo systemctl daemon-reload

You will see the new timeout value:

$ sudo systemctl show elasticsearch | grep ^Timeout
TimeoutStartUSec=8min 20s
TimeoutStopUSec=infinity

Sample illustration after a successful deployment from the Ansible playbook.
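The timeout change above edits the packaged unit file, which a package update may overwrite. A systemd drop-in is an alternative that keeps the override in a separate file. This is a minimal sketch: the helper name `write_es_timeout_dropin` and the file name `timeout.conf` are illustrative, while the `elasticsearch.service.d` directory follows systemd's standard drop-in convention.

```shell
# Write a drop-in that raises the start timeout without touching the
# packaged unit file. Pass a different directory as $1 to try it out safely.
write_es_timeout_dropin() {
  local dropin_dir="${1:-/etc/systemd/system/elasticsearch.service.d}"
  mkdir -p "$dropin_dir" &&
    cat > "$dropin_dir/timeout.conf" <<'EOF'
[Service]
TimeoutStartSec=500
EOF
}
```

Run the function as root on each affected node, then reload systemd with `sudo systemctl daemon-reload` as shown above.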

Step 5 – Confirm Elasticsearch cluster installation

Log in to the master node:

ssh master

Then check the health status:

$ curl http://localhost:9200/_cluster/health?pretty

{
  "cluster_name" : "el-cluster",
  "status" : "green",
  "timed_out" : false,
  "number_of_nodes" : 3,
  "number_of_data_nodes" : 2,
  "active_primary_shards" : 0,
  "active_shards" : 0,
  "relocating_shards" : 0,
  "initializing_shards" : 0,
  "unassigned_shards" : 0,
  "delayed_unassigned_shards" : 0,
  "number_of_pending_tasks" : 0,
  "number_of_in_flight_fetch" : 0,
  "task_max_waiting_in_queue_millis" : 0,
  "active_shards_percent_as_number" : 100.0
}

Check the master node:

$ curl -XGET 'http://localhost:9200/_cat/master'

3yCGVlAFSDGguEkO8WqSgw 172.16.120.129 172.16.120.129 master

List all nodes, including the data nodes:

$ curl -XGET 'http://localhost:9200/_cat/nodes'

172.16.120.129 17 94 0 0.00 0.10 0.10 imr * master

172.16.120.130 10 47 0 0.00 0.03 0.03 di - data-02

172.16.120.131 6 30 0 0.00 0.02 0.02 di - data-01
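The health check can also be scripted, for example as the last step of a deployment. The sketch below reads the `?pretty` output of `_cluster/health` and checks the `status` and `number_of_nodes` fields. The helper names `health_field` and `cluster_is_healthy` are hypothetical, and the parsing assumes one field per line, as in the pretty-printed output shown earlier. A JSON tool such as jq would be more robust if it is available.

```shell
# Print the value of one top-level field from pretty-printed health JSON
# read on stdin (assumes one "name" : value pair per line).
health_field() {
  grep "\"$1\"" | head -n 1 | cut -d: -f2 | tr -d ' ",'
}

# Succeed only if the cluster is green and has the expected node count.
# $1 = expected number of nodes; health JSON is read from stdin.
cluster_is_healthy() {
  local json status nodes
  json=$(cat)
  status=$(printf '%s\n' "$json" | health_field status)
  nodes=$(printf '%s\n' "$json" | health_field number_of_nodes)
  [ "$status" = "green" ] && [ "$nodes" = "$1" ]
}
```

On the master node, this could be used as `curl -s 'http://localhost:9200/_cluster/health?pretty' | cluster_is_healthy 3 && echo "cluster is green with 3 nodes"`.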