Kubernetes, also called K8s, is an open source container orchestration system. It makes it easy to create and manage containers by automating the deployment, scaling and management of containerized applications. Kubernetes combines declarative configuration with automation to keep the cluster in its desired state.

A Kubernetes cluster can be deployed as a single node, for learners and developers, or as a multi-node production environment. Installing a Kubernetes cluster was initially a daunting task for newcomers who just wanted to familiarize themselves with the technology. It entailed a multi-node setup requiring at least two servers, for the controller and worker nodes, and a number of commands to be run on each server to create the cluster. Today, however, there are several simple Kubernetes distributions that can deploy a single-node or multi-node cluster with very few commands. These include Minikube, MicroK8s and K0s. In this article, we are going to look at how to deploy a K0s Kubernetes cluster using k0sctl.

What is K0s and K0sctl?

K0s is one of the simplest ways to deploy a Kubernetes cluster, both for personal use and production. With only one command, you can create a cluster without needing extensive prior knowledge. K0s is distributed as a single binary with no host operating system dependencies except the kernel. It is very lightweight, so it needs minimal resources. To learn how to create a k0s Kubernetes cluster, check out Deploy k0s Kubernetes Cluster on Ubuntu.

K0sctl is a command-line tool for bootstrapping and managing k0s clusters. It connects to remote hosts via SSH, deploys k0s and joins the nodes together to create a cluster. It uses a configuration file whose parameters are defined by the user. With k0sctl, the cluster is changed by altering the parameters in the configuration file and applying it again.

Installing k0s Kubernetes cluster using k0sctl

To create a multi-node cluster, we need at least two servers: a controller and a worker. We will install the k0sctl tool on our local machine, which should be able to reach the remote hosts through SSH. The remote servers should also have a user with admin privileges and passwordless sudo. K0sctl is available for Linux, Windows and macOS. You can have as many controller and worker nodes as you need, but for this article, I only have one controller node and one worker node. Here is my setup:

- Local machine (Ubuntu – to install k0sctl)

- 192.168.100.201 (Ubuntu 20.04, to be controller node, hostname: controller, ssh user: root)

- 192.168.100.202 (Ubuntu 20.04, to be worker node, hostname: worker, ssh user: root)
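Since k0sctl depends on non-interactive SSH access to every host in this list, it can be useful to confirm that access from the local machine once the keys from step 1 are in place. The following is a minimal sketch, not part of k0sctl: `check_ssh` and `check_all` are hypothetical helper names, and the `HOSTS` value assumes the root user and the two addresses from the setup above.

```shell
# Hosts from the setup list above; adjust the user and addresses to your own.
HOSTS="root@192.168.100.201 root@192.168.100.202"

# check_ssh TARGET: succeeds only if key-based login works without a prompt.
# BatchMode=yes makes ssh fail instead of asking for a password.
check_ssh() {
  ssh -o BatchMode=yes -o ConnectTimeout=5 "$1" true
}

# check_all TARGET...: report every host and return non-zero if any failed.
check_all() {
  failed=0
  for target in "$@"; do
    if check_ssh "$target"; then
      echo "ok: $target"
    else
      echo "FAILED: $target"
      failed=1
    fi
  done
  return "$failed"
}

# Usage (opens real SSH connections):
#   check_all $HOSTS
```

A host reported as FAILED usually means the public key has not been copied to it yet or the user name is wrong.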

System Requirements for a K0s Cluster

The minimum requirements for a K0s cluster are as below:

| Role | Virtual CPU | RAM | Storage (k0s components only) |
|---|---|---|---|
| Controller node | 1 vCPU (2 recommended) | 1 GB (2 GB recommended) | ~0.5 GB |
| Worker node | 1 vCPU (2 recommended) | 0.5 GB (1 GB recommended) | ~1.3 GB |
| Controller+worker | 1 vCPU (2 recommended) | 1 GB (2 GB recommended) | ~1.7 GB |
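The minimum CPU and RAM figures in this table can be turned into a quick check before a host is added to the cluster. This is only a sketch: `meets_minimum` is a hypothetical helper, RAM is expressed in MB, and storage is left out.

```shell
# meets_minimum ROLE VCPUS RAM_MB
# Returns 0 when the host satisfies the minimum CPU and RAM figures
# from the table above (storage is not checked).
meets_minimum() {
  role=$1 vcpus=$2 ram_mb=$3
  case "$role" in
    controller|controller+worker) min_ram=1024 ;;   # 1 GB
    worker)                       min_ram=512  ;;   # 0.5 GB
    *) echo "unknown role: $role" >&2; return 2 ;;
  esac
  [ "$vcpus" -ge 1 ] && [ "$ram_mb" -ge "$min_ram" ]
}

# Example on a Linux host: read the CPU count and total RAM from the system.
#   vcpus=$(nproc)
#   ram_mb=$(awk '/^MemTotal:/ {print int($2 / 1024)}' /proc/meminfo)
#   meets_minimum worker "$vcpus" "$ram_mb" && echo "meets the worker minimum"
```

Treat the result as a rough guide: a machine sold as "1 GB" usually reports slightly less than 1024 MB in /proc/meminfo, so a borderline failure does not necessarily mean the host is unusable.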

The supported host operating systems and architectures are:

- Linux, kernel v3.10 or later

- Windows Server 2019

- x86-64

- ARM64
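On Linux, the kernel requirement can be checked against the output of `uname -r`. The sketch below assumes a release string that starts with MAJOR.MINOR, which is the usual form; `kernel_at_least` is a hypothetical helper name.

```shell
# kernel_at_least MIN RELEASE
# MIN is MAJOR.MINOR (for example 3.10); RELEASE is a `uname -r` string.
kernel_at_least() {
  min=$1 rel=$2
  min_major=${min%%.*}
  min_minor=${min#*.}
  major=${rel%%.*}
  rest=${rel#*.}
  minor=${rest%%[!0-9]*}   # leading digits only, e.g. "15" from "15.0-91-generic"
  [ "$major" -gt "$min_major" ] ||
    { [ "$major" -eq "$min_major" ] && [ "$minor" -ge "$min_minor" ]; }
}

# Usage on the target host:
#   kernel_at_least 3.10 "$(uname -r)" && echo "kernel is new enough for k0s"
```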

1. Generate SSH keys and copy public key to server

For seamless access to the remote hosts, use SSH keys. You can generate a key pair and copy the public key to the servers using the commands below:

$ ssh-keygen

Example:

$ ssh-keygen

Generating public/private rsa key pair.

Enter file in which to save the key (/home/techviewleo/.ssh/id_rsa):

Enter passphrase (empty for no passphrase):

Enter same passphrase again:

Your identification has been saved in /home/techviewleo/.ssh/id_rsa.

Your public key has been saved in /home/techviewleo/.ssh/id_rsa.pub.

The key fingerprint is:

SHA256:RLxJP3x3iwaD5H8hbq6QdtXBDDrmCKZVS6jo7lT0tjY [email protected]

The key's randomart image is:

+---[RSA 3072]----+

| .+. . |

| .o.+.. + |

| ...+ o+O. + |

| ...= ..*o=+o.o .|

|. o o .S.o++oo..|

| .. . . . .+ + . |

|.. E + .o o |

|.. . o o . |

|.. .. |

+----[SHA256]-----+

Copy the public key to the remote server where the Kubernetes cluster is being deployed. If you need to confirm the server's IP address, run the command below on it:

$ ip ad

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1450 qdisc fq_codel state UP group default qlen 1000

link/ether fa:16:3e:a0:6b:2c brd ff:ff:ff:ff:ff:ff

inet 192.168.100.201/24 brd 192.168.100.255 scope global dynamic noprefixroute eth0

valid_lft 55261sec preferred_lft 55261sec

inet6 fe80::f816:3eff:fea0:6b2c/64 scope link

valid_lft forever preferred_lft forever

Copy the key to the root user account:

$ ssh-copy-id root@192.168.100.201

/usr/bin/ssh-copy-id: INFO: Source of key(s) to be installed: "/home/techviewleo/.ssh/id_rsa.pub"

The authenticity of host '192.168.100.201 (192.168.100.201)' can't be established.

ECDSA key fingerprint is SHA256:xb/2/RxzVzUKW1jAvQckvzWrNSrwrWkyTzoLqw1LO64.

Are you sure you want to continue connecting (yes/no/[fingerprint])? yes

/usr/bin/ssh-copy-id: INFO: Source of key(s) to be installed: "/home/techviewleo/.ssh/id_rsa.pub"

/usr/bin/ssh-copy-id: INFO: attempting to log in with the new key(s), to filter out any that are already installed

/usr/bin/ssh-copy-id: INFO: 1 key(s) remain to be installed -- if you are prompted now it is to install the new keys

root@192.168.100.201's password:

Number of key(s) added: 1

Now try logging into the machine, with: "ssh 'root@192.168.100.201'"

and check to make sure that only the key(s) you wanted were added.

Test that SSH connectivity works without requiring a password:

$ ssh root@192.168.100.201

Activate the web console with: systemctl enable --now cockpit.socket

Last failed login: Tue Jan 11 19:49:41 UTC 2022 from 192.168.100.201 on ssh:notty

There were 6 failed login attempts since the last successful login.

Last login: Wed Jan 5 12:25:49 2022 from 192.168.100.2

[root@ubuntu-linux-01 ~]#

2. Install k0sctl tool on your Linux / macOS

As stated above, k0sctl is available for Linux, Windows and macOS. Head over to the official GitHub releases page and download the binary appropriate for your OS. If you are running Linux like myself, or macOS, you can download it with the commands in this step.
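The download commands in this step use a different file name for each OS and CPU architecture, so the choice can be scripted. The sketch below is a hypothetical helper, `k0sctl_asset`, whose asset names are copied from the commands in this article; check them against the releases page, since naming can differ between versions. 64-bit ARM Linux (`aarch64`) is deliberately left unmapped because the article does not list an asset for it.

```shell
# k0sctl_asset OS ARCH
# OS and ARCH are the outputs of `uname -s` and `uname -m`.
# Prints the asset name used in this article, or returns 1 for
# combinations the article does not cover.
k0sctl_asset() {
  case "$1/$2" in
    Linux/x86_64)  echo "k0sctl-linux-x64" ;;
    Linux/arm*)    echo "k0sctl-linux-arm" ;;
    Darwin/x86_64) echo "k0sctl-darwin-x64" ;;
    Darwin/arm64)  echo "k0sctl-darwin-arm64" ;;
    *) echo "no asset listed for $1/$2" >&2; return 1 ;;
  esac
}

# Usage:
#   asset=$(k0sctl_asset "$(uname -s)" "$(uname -m)") && echo "$asset"
```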

Get the latest software release tag:

VER=$(curl -s https://api.github.com/repos/k0sproject/k0sctl/releases/latest|grep tag_name | cut -d '"' -f 4)

echo $VER

Download the k0sctl binary suitable for your OS and CPU architecture:

### Linux 64-bit ###

wget https://github.com/k0sproject/k0sctl/releases/download/${VER}/k0sctl-linux-x64 -O k0sctl

### Linux ARM ###

wget https://github.com/k0sproject/k0sctl/releases/download/${VER}/k0sctl-linux-arm -O k0sctl

### macOS 64-bit ###

wget https://github.com/k0sproject/k0sctl/releases/download/${VER}/k0sctl-darwin-x64 -O k0sctl

### macOS ARM ###

wget https://github.com/k0sproject/k0sctl/releases/download/${VER}/k0sctl-darwin-arm64 -O k0sctl

Once k0sctl is downloaded, make it executable and copy it to a directory in your $PATH:

chmod +x k0sctl

sudo cp k0sctl /usr/local/bin/

Check the version to confirm that the k0sctl binary works:

$ k0sctl version

version: v0.19.4

commit: a06d3f6

Enable command completion for your shell:

### Bash ###

sudo sh -c 'k0sctl completion >/etc/bash_completion.d/k0sctl'

source /etc/bash_completion.d/k0sctl

### Zsh ###

sudo sh -c 'k0sctl completion > /usr/local/share/zsh/site-functions/_k0sctl'

source /usr/local/share/zsh/site-functions/_k0sctl

### Fish ###

k0sctl completion > ~/.config/fish/completions/k0sctl.fish

source ~/.config/fish/completions/k0sctl.fish

3. Create K0sctl configuration file

To create the cluster configuration file, initialize k0sctl to give us the default configuration which we can edit to suit our cluster needs. If you just run the init command, you should see an output as below:

$ k0sctl init

apiVersion: k0sctl.k0sproject.io/v1beta1
kind: Cluster
metadata:
  name: k0s-cluster
spec:
  hosts:
  - ssh:
      address: 10.0.0.1
      user: root
      port: 22
      keyPath: null
    role: controller
  - ssh:
      address: 10.0.0.2
      user: root
      port: 22
      keyPath: null
    role: worker
  k0s:
    version: 1.31.2+k0s.0
    dynamicConfig: false

Now we need to save the configuration to a file so that we can edit it to suit our cluster.

k0sctl init --k0s > k0sctl.yaml

Here is my final configuration after updating the remote host IPs (2 nodes):

$ vim k0sctl.yaml

apiVersion: k0sctl.k0sproject.io/v1beta1
kind: Cluster
metadata:
  name: k0s-cluster
spec:
  hosts:
  - ssh:
      address: 192.168.100.201
      user: root
      port: 22
      keyPath: /home/$USER/.ssh/id_rsa
    role: controller
  - ssh:
      address: 192.168.100.202
      user: root
      port: 22
      keyPath: /home/$USER/.ssh/id_rsa
    role: worker
....

Example configuration for a single node (controller+worker):

$ vim k0sctl.yaml

apiVersion: k0sctl.k0sproject.io/v1beta1
kind: Cluster
metadata:
  name: k0s-cluster
spec:
  hosts:
  - ssh:
      address: 192.168.100.201
      user: root
      port: 22
      keyPath: /home/$USER/.ssh/id_rsa
    role: controller+worker
  k0s:
    version: 1.31.2+k0s.0

Creating k0s Cluster
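Before applying the configuration, it can help to double-check which hosts and roles the file declares. The following is a line-based sketch rather than a real YAML parser: `list_hosts` is a hypothetical helper, and it assumes each host entry has an `address:` line followed later by a `role:` line, as in the examples in this article.

```shell
# list_hosts FILE
# Prints "ADDRESS ROLE" for each host entry in a k0sctl configuration file.
# Relies on the field order used in this article (address first, then role).
list_hosts() {
  awk '
    $1 == "address:" { addr = $2 }
    $1 == "role:"    { print addr, $2 }
  ' "$1"
}

# Usage:
#   list_hosts k0sctl.yaml
```

If the output does not show the address and role pairs you expect, fix k0sctl.yaml before running the apply command.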

Now that we have the configuration file, we proceed to create the cluster by applying the configuration file as below:

$ k0sctl apply --config k0sctl.yaml

⠀⣿⣿⡇⠀⠀⢀⣴⣾⣿⠟⠁⢸⣿⣿⣿⣿⣿⣿⣿⡿⠛⠁⠀⢸⣿⣿⣿⣿⣿⣿⣿⣿⣿⣿⣿⠀█████████ █████████ ███

⠀⣿⣿⡇⣠⣶⣿⡿⠋⠀⠀⠀⢸⣿⡇⠀⠀⠀⣠⠀⠀⢀⣠⡆⢸⣿⣿⠀⠀⠀⠀⠀⠀⠀⠀⠀⠀███ ███ ███

⠀⣿⣿⣿⣿⣟⠋⠀⠀⠀⠀⠀⢸⣿⡇⠀⢰⣾⣿⠀⠀⣿⣿⡇⢸⣿⣿⣿⣿⣿⣿⣿⣿⣿⣿⣿⠀███ ███ ███

⠀⣿⣿⡏⠻⣿⣷⣤⡀⠀⠀⠀⠸⠛⠁⠀⠸⠋⠁⠀⠀⣿⣿⡇⠈⠉⠉⠉⠉⠉⠉⠉⠉⢹⣿⣿⠀███ ███ ███

⠀⣿⣿⡇⠀⠀⠙⢿⣿⣦⣀⠀⠀⠀⣠⣶⣶⣶⣶⣶⣶⣿⣿⡇⢰⣶⣶⣶⣶⣶⣶⣶⣶⣾⣿⣿⠀█████████ ███ ██████████

k0sctl v0.19.4 Copyright 2022, k0sctl authors.

Anonymized telemetry of usage will be sent to the authors.

By continuing to use k0sctl you agree to these terms:

https://k0sproject.io/licenses/eula

INFO ==> Running phase: Connect to hosts

INFO [ssh] 5.75.203.13:22: connected

INFO ==> Running phase: Detect host operating systems

INFO [ssh] 5.75.203.13:22: is running Ubuntu 22.04.1 LTS

INFO ==> Running phase: Acquire exclusive host lock

INFO ==> Running phase: Prepare hosts

INFO ==> Running phase: Gather host facts

INFO [ssh] 5.75.203.13:22: using jammy as hostname

INFO [ssh] 5.75.203.13:22: discovered eth0 as private interface

INFO ==> Running phase: Validate hosts

INFO ==> Running phase: Gather k0s facts

INFO ==> Running phase: Validate facts

INFO ==> Running phase: Download k0s on hosts

INFO [ssh] 5.75.203.13:22: downloading k0s v1.31.2+k0s.0

INFO ==> Running phase: Configure k0s

INFO [ssh] 5.75.203.13:22: validating configuration

INFO [ssh] 5.75.203.13:22: configuration was changed

INFO ==> Running phase: Initialize the k0s cluster

INFO [ssh] 5.75.203.13:22: installing k0s controller

INFO [ssh] 5.75.203.13:22: waiting for the k0s service to start

INFO [ssh] 5.75.203.13:22: waiting for kubernetes api to respond

INFO ==> Running phase: Release exclusive host lock

INFO ==> Running phase: Disconnect from hosts

INFO ==> Finished in 41s

INFO k0s cluster version 1.31.2+k0s.0 is now installed

INFO Tip: To access the cluster you can now fetch the admin kubeconfig using:

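INFO k0sctl kubeconfig

Your cluster has been successfully created. The sample output above comes from a run against a single host; with the two-node configuration, the cluster consists of one controller node and one worker node.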

Accessing the Cluster

To access the cluster, we need kubectl to run Kubernetes commands. Install kubectl using the commands below:

### Install kubectl on Linux ###

curl -LO "https://dl.k8s.io/release/$(curl -L -s https://dl.k8s.io/release/stable.txt)/bin/linux/amd64/kubectl"

sudo install -o root -g root -m 0755 kubectl /usr/local/bin/kubectl

kubectl version --client

### Install kubectl on macOS Intel ###

curl -LO "https://dl.k8s.io/release/$(curl -L -s https://dl.k8s.io/release/stable.txt)/bin/darwin/amd64/kubectl"

chmod +x ./kubectl

sudo mv ./kubectl /usr/local/bin/kubectl

sudo chown root: /usr/local/bin/kubectl

kubectl version --client

### Install kubectl on macOS Apple Silicon ###

curl -LO "https://dl.k8s.io/release/$(curl -L -s https://dl.k8s.io/release/stable.txt)/bin/darwin/arm64/kubectl"

chmod +x ./kubectl

sudo mv ./kubectl /usr/local/bin/kubectl

sudo chown root: /usr/local/bin/kubectl

kubectl version --client

Get the kubeconfig file using the command below:

k0sctl kubeconfig > kubeconfig

Remember to also set the KUBECONFIG environment variable to point to the kubeconfig file.

export KUBECONFIG=$PWD/kubeconfig

Alternatively, save it as the default under the ~/.kube directory:

mkdir -p ~/.kube

k0sctl kubeconfig > ~/.kube/config

Now you can use kubectl to manage the cluster, as shown in the examples below:

$ kubectl get nodes

NAME STATUS ROLES AGE VERSION

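jammy Ready control-plane 3m47s v1.31.2+k0s

Note that this command only lists nodes that run workloads. The sample output shows a single controller+worker host; in a multi-node cluster, dedicated controllers do not appear in the list because k0s keeps controllers and workers isolated.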

$ kubectl cluster-info

Kubernetes control plane is running at https://192.168.100.201:6443

CoreDNS is running at https://192.168.100.201:6443/api/v1/namespaces/kube-system/services/kube-dns:dns/proxy

To further debug and diagnose cluster problems, use 'kubectl cluster-info dump'.

Listing pods in the kube-system namespace:

$ kubectl get pods -n kube-system

NAME READY STATUS RESTARTS AGE

coredns-6d9f49dcbb-4c56j 1/1 Running 0 16m

konnectivity-agent-4xc98 1/1 Running 0 16m

kube-proxy-cw9s9 1/1 Running 0 16m

kube-router-pbwdl 1/1 Running 0 16m

metrics-server-74c967d8d4-2dwbv 1/1 Running 0 16m

How to destroy k0s cluster

To do a clean up of your cluster and remove the setup, simply run the below command:

$ k0sctl reset -c k0sctl.yaml

k0sctl v0.19.4 Copyright 2022, k0sctl authors.

Anonymized telemetry of usage will be sent to the authors.

By continuing to use k0sctl you agree to these terms:

https://k0sproject.io/licenses/eula

? Going to reset all of the hosts, which will destroy all configuration and data, Are you sure? Yes

INFO ==> Running phase: Connect to hosts

INFO [ssh] 5.75.203.13:22: connected

INFO ==> Running phase: Detect host operating systems

INFO [ssh] 5.75.203.13:22: is running Ubuntu 22.04.1 LTS

INFO ==> Running phase: Acquire exclusive host lock

INFO ==> Running phase: Prepare hosts

INFO ==> Running phase: Gather k0s facts

INFO [ssh] 5.75.203.13:22: is running k0s controller+worker version 1.31.2+k0s.0

WARN [ssh] 5.75.203.13:22: the controller+worker node will not schedule regular workloads without toleration for node-role.kubernetes.io/master:NoSchedule unless 'noTaints: true' is set

INFO ==> Running phase: Reset controllers

INFO [ssh] 5.75.203.13:22: reset

INFO ==> Running phase: Reset leader

INFO [ssh] 5.75.203.13:22: reset

INFO ==> Running phase: Release exclusive host lock

INFO ==> Running phase: Disconnect from hosts

INFO ==> Finished in 15s

For more management options, use the help command:

$ k0sctl help

NAME:

k0sctl - k0s cluster management tool

USAGE:

k0sctl [global options] command [command options] [arguments...]

COMMANDS:

version Output k0sctl version

apply Apply a k0sctl configuration

kubeconfig Output the admin kubeconfig of the cluster

init Create a configuration template

reset Remove traces of k0s from all of the hosts

backup Take backup of existing clusters state

help, h Shows a list of commands or help for one command

GLOBAL OPTIONS:

--debug, -d Enable debug logging (default: false) [$DEBUG]

--trace Enable trace logging (default: false) [$TRACE]

--no-redact Do not hide sensitive information in the output (default: false)

--help, -h show help (default: false)

More K0sctl File Configurations

The following are various options that you can incorporate into the k0sctl config file. The field spec.hosts[*].role accepts the following values:

- controller – A controller host

- controller+worker – A controller host that will also run workloads

- single – A single-node cluster, only one host is used

- worker – A worker node
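A typo in a role name is easy to miss in a longer configuration file. As an illustration, the sketch below checks role values against the four listed above. Both `valid_role` and `check_roles` are hypothetical helpers, and the file scan is line-based rather than a full YAML parse.

```shell
# valid_role ROLE: succeeds if ROLE is one of the values listed above.
valid_role() {
  case "$1" in
    controller|controller+worker|single|worker) return 0 ;;
    *) return 1 ;;
  esac
}

# check_roles FILE: print every unsupported role found in a k0sctl config
# and return non-zero if there is at least one.
check_roles() {
  status=0
  for role in $(awk '
      $1 == "role:"              { print $2 }
      $1 == "-" && $2 == "role:" { print $3 }
    ' "$1"); do
    if ! valid_role "$role"; then
      echo "unsupported role: $role"
      status=1
    fi
  done
  return "$status"
}

# Usage:
#   check_roles k0sctl.yaml && echo "all roles look valid"
```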

You can also have k0sctl download the k0s binaries for the target host, cache them locally and upload them to the target host. This is controlled by spec.hosts[*].uploadBinary <boolean> (optional) (default: false). When set to false (the default), the binaries are not uploaded from the local machine; each target host downloads k0s itself.

# download and cache locally, then upload to the target host
spec:
  hosts:
  - ssh:
      address: 192.168.100.201
      user: root
      port: 22
      keyPath: /home/techviewleo/.ssh/id_rsa
    role: controller
    uploadBinary: true

To set a hostname for the host, use the option below. If not set, k0sctl automatically picks the hostname reported by the OS.

spec.hosts[*].hostname <string> (optional)

Pass extra flags to the k0s install command with the configuration below:

spec.hosts[*].installFlags <sequence> (optional)

To set environment variables on the target host, use spec.hosts[*].environment <mapping> (optional) as shown in the example:

environment:
  HTTP_PROXY: 192.168.100.123:443

If you need to upload files to the remote host, list them under spec.hosts[*].files:

- name: <name-of-file>
  src: <file-source-dir>
  dstDir: <dest-dir-in-target-host>
  perm: <file-permission, e.g. 0644>

If for some reason you wish to override OS auto-detection on the target host, set the os parameter as shown:

- role: worker
  os: debian
  ssh:
    address: 192.168.100.201

For more of these configurations, check out the k0sctl GitHub page, https://github.com/k0sproject/k0sctl

Control Plane Configuration Options

Once you create the cluster, it runs with a default k0s configuration. To inspect those defaults, access the controller node and run the command below, which writes the default k0s configuration to a file. The redirection is wrapped in sh -c so that the file is written with root privileges. (On recent k0s releases, the equivalent command is k0s config create.)

sudo sh -c 'k0s default-config > /etc/k0s/k0s.yaml'

You can check the contents of the file using the command:

sudo cat /etc/k0s/k0s.yaml

You can modify the file to suit your needs. If you change the settings, install the controller with the modified configuration using the command below:

sudo k0s install controller -c <path-to-config-file>

Note that you can modify the k0s configuration file even while the cluster is running, but you need to restart k0s for the changes to take effect.

sudo k0s stop

sudo k0s start

Worker Nodes Configurations

Unlike the controller, a k0s worker does not take a special YAML configuration file. However, workers can still be configured through command-line flags. The example below passes the --labels flag, which takes key=value pairs:

k0s worker --token-file k0s.token --labels="<key>=<value>"

K0s Configure Cloud Providers

K0s-managed Kubernetes does not include a built-in cloud provider, so you have to configure one manually to enable cloud provider support in the k0s cluster. There are two ways to configure a cloud provider for your k0s cluster, discussed below:

K0s Cloud Provider

K0s provides its own lightweight cloud provider that can assign static external IPs to nodes, which is useful for exposing worker nodes. To use it, start the workers and the controller with the cloud provider flags shown below:

#worker

sudo k0s worker --enable-cloud-provider=true

#controller

sudo k0s controller --enable-k0s-cloud-provider=true

After that, add a static IP to the node using an annotation; both IPv4 and IPv6 are supported.

kubectl annotate node <node> k0sproject.io/node-ip-external=<external IP>

Using Built-in Cloud Manifest

The manifest deployer is one of the ways you can run k0s with your own preferred extensions. The deployer runs on the controller node, deploys manifests at runtime and reads all manifests from /var/lib/k0s/manifests by default. Access the controller node and run the command below:

$ ls -l /var/lib/k0s/

total 20

drwxr-xr-x 2 root root 4096 Sad 4 23:42 bin

drwx------ 3 etcd root 4096 Sad 13 13:09 etcd

-rw-r--r-- 1 root root 241 Sad 13 13:09 konnectivity.conf

drwxr-xr-x 14 root root 4096 Sad 4 23:42 manifests

drwxr-x--x 3 root root 4096 Sad 4 23:42 pki

The manifest deployer keeps watching for changes to the files, so you don’t have to apply changes manually. Create directories inside manifests to hold the files that define the deployments you need. Let’s look at an example nginx manifest. In the manifests directory, I created another directory called nginx, and inside it I have an nginx.yaml with the configuration below.

apiVersion: v1
kind: Namespace
metadata:
  name: nginx
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-deployment
  namespace: nginx
spec:
  selector:
    matchLabels:
      app: nginx
  replicas: 3
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
      - name: nginx
        image: nginx:latest
        ports:
        - containerPort: 80

Once the file is in place, new pods will appear soon after; you don’t have to do anything else. You can check the pods from either node. (If you get an error from the controller or worker nodes, you may have to copy the kubeconfig file to the individual nodes and export KUBECONFIG. Also, if you don’t have kubectl on the worker and controller, you can use ‘k0s kubectl’ as below.)

$ sudo k0s kubectl get pods --namespace nginx

NAME READY STATUS RESTARTS AGE

nginx-deployment-585449566-kwv5s 1/1 Running 0 2m40s

nginx-deployment-585449566-vt59c 1/1 Running 0 2m40s

nginx-deployment-585449566-wqhfw 1/1 Running 0 2m40s

With the built-in manifest deployer, you can easily deploy a cloud provider as a k0s-managed stack. To use this method, kube-apiserver and kube-controller-manager must not specify the --cloud-provider flag, and kubelet must run with --cloud-provider=external.

Other requirements include:

- Cloud authentication/authorization

- Kubernetes authorization/authentication

- High availability

Also note that the configuration will depend on the cloud provider you have opted to use. Kubernetes already supports controllers for some cloud providers but not others. For the supported ones, you can simply run the cloud controller manager as a DaemonSet in the cluster. For example, if your cloud provider is AWS, you could place a manifest under /var/lib/k0s/manifests/aws that resembles the one below (requirements vary between cloud providers):

---
apiVersion: v1
kind: ServiceAccount
metadata:
  name: cloud-controller-manager
  namespace: kube-system
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: system:cloud-controller-manager
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: cluster-admin
subjects:
- kind: ServiceAccount
  name: cloud-controller-manager
  namespace: kube-system
---
apiVersion: apps/v1
kind: DaemonSet
metadata:
  labels:
    k8s-app: cloud-controller-manager
  name: cloud-controller-manager
  namespace: kube-system
spec:
  selector:
    matchLabels:
      k8s-app: cloud-controller-manager
  template:
    metadata:
      labels:
        k8s-app: cloud-controller-manager
    spec:
      serviceAccountName: cloud-controller-manager
      containers:
      - name: cloud-controller-manager
        # for in-tree providers we use k8s.gcr.io/cloud-controller-manager
        # this can be replaced with any other image for out-of-tree providers
        image: k8s.gcr.io/cloud-controller-manager:v1.8.0
        command:
        - /usr/local/bin/cloud-controller-manager
        - --cloud-provider=[YOUR_CLOUD_PROVIDER]  # Add your own cloud provider here!
        - --leader-elect=true
        - --use-service-account-credentials
        # these flags will vary for every cloud provider
        - --allocate-node-cidrs=true
        - --configure-cloud-routes=true
        - --cluster-cidr=172.17.0.0/16
      tolerations:
      # this is required so CCM can bootstrap itself
      - key: node.cloudprovider.kubernetes.io/uninitialized
        value: "true"
        effect: NoSchedule
      # this is to have the daemonset runnable on master nodes
      # the taint may vary depending on your cluster setup
      - key: node-role.kubernetes.io/master
        effect: NoSchedule
      # this is to restrict CCM to only run on master nodes
      # the node selector may vary depending on your cluster setup
      nodeSelector:
        node-role.kubernetes.io/master: ""

Once you have all the required manifests in place, k0s will handle the deployment for you. The cloud controller manager can implement the following:

- Node controller for updating or deleting Kubernetes nodes using API

- Service controller for creating cloud load balancers for services of type LoadBalancer

- Route controller for setting network routes on the cloud

Install Traefik Ingress Controller in K0S

In containerized environments, an ingress controller applies the routing rules for traffic entering a Kubernetes cluster. It manages access to the cluster through the Ingress specification. Kubernetes offers several ways of exposing applications: ClusterIP, NodePort and LoadBalancer, which are Kubernetes service types, and the Ingress resource, which allows host-based or URL-based HTTP routing. The advantage of an Ingress over the rest is that it can combine routing rules in a single resource to expose many applications.

- ClusterIP – Exposes the service within the cluster

- NodePort – Exposes the service using a port assigned on each node

- LoadBalancer – Creates a load balancer provided by a cloud provider

- Ingress – Operates at layer 7 for TLS termination, name-based virtual hosts etc., depending on the controller type

An Ingress is the API object that defines routing rules such as load balancing and SSL termination, while an ingress controller is the component that fulfils those rules. There are various ingress controller approaches, including dedicated controllers such as ingress-nginx, API gateways such as Traefik, or one’s own custom configuration using Nginx or HAProxy.
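To make the idea of an Ingress object concrete, the sketch below prints a minimal `networking.k8s.io/v1` Ingress that routes one host name to one service. The function name `ingress_manifest` and the example values (`web`, `web.example.com`) are hypothetical. Depending on the controller you install, you may also need to set `spec.ingressClassName`, which is left out here.

```shell
# ingress_manifest NAME HOST SERVICE PORT
# Prints a minimal Ingress that sends all paths of HOST to SERVICE:PORT.
ingress_manifest() {
  cat <<EOF
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: $1
spec:
  rules:
  - host: $2
    http:
      paths:
      - path: /
        pathType: Prefix
        backend:
          service:
            name: $3
            port:
              number: $4
EOF
}

# Usage (hypothetical names; needs a running ingress controller):
#   ingress_manifest web web.example.com nginx 80 | kubectl apply -f -
```

Additional entries under `rules` would let the same resource expose further applications, which is the advantage over creating one LoadBalancer service per application.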

Installing Traefik and MetalLB

MetalLB is a network load balancer implementation that you can use if you are not running on a supported cloud provider. Without a load balancer implementation, the external IP of a LoadBalancer service stays in the ‘pending’ state indefinitely.
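A quick way to spot this situation is to filter the service list for LoadBalancer entries that have no address yet. The sketch below reads the default table output of `kubectl get services` from standard input; `pending_services` is a hypothetical helper, and it assumes the default column order (NAME, TYPE, CLUSTER-IP, EXTERNAL-IP).

```shell
# pending_services: read `kubectl get services` output on stdin and print the
# names of LoadBalancer services whose EXTERNAL-IP column is still <pending>.
pending_services() {
  awk 'NR > 1 && $2 == "LoadBalancer" && $4 == "<pending>" { print $1 }'
}

# Usage:
#   kubectl get services | pending_services
```

An empty result means every LoadBalancer service in the current namespace already has an external IP.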

For my installation, I am using Helm, a package manager for Kubernetes. First ensure you have Helm installed (note: I am running the commands from the k0sctl node).

curl -fsSL -o get_helm.sh https://raw.githubusercontent.com/helm/helm/master/scripts/get-helm-3

chmod 700 get_helm.sh

sudo ./get_helm.sh

helm version

Once Helm is installed, add Traefik’s chart repository to Helm and update the chart repository:

helm repo add traefik https://helm.traefik.io/traefik

helm repo update

Now deploy Traefik:

helm install traefik traefik/traefik

Next, proceed to install MetalLB with the commands below. The manifest paths and the ConfigMap-based configuration used in this article belong to MetalLB v0.12 and earlier, so the release is pinned here:

kubectl apply -f https://raw.githubusercontent.com/metallb/metallb/v0.12.1/manifests/namespace.yaml

kubectl apply -f https://raw.githubusercontent.com/metallb/metallb/v0.12.1/manifests/metallb.yaml

MetalLB will remain idle until configured. In my case, I am simply adding the pool of IPs to be used by MetalLB, but you can do more configuration. I am adding the configuration to the ‘manifests’ folder on the controller. Access the controller node and run the commands below:

cd /var/lib/k0s/manifests

sudo mkdir metallb

sudo vim metallb/config.yaml

Add the following content to config.yaml and save:

apiVersion: v1
kind: ConfigMap
metadata:
  namespace: metallb-system
  name: config
data:
  config: |
    address-pools:
    - name: default
      protocol: layer2
      addresses:
      - 192.168.100.10-192.168.100.20

Once the configuration is picked up, your load balancer is assigned an external IP. I have also exposed an Nginx deployment with type LoadBalancer using the commands below, and it got an external IP in the range defined above:

kubectl create deployment nginx --image nginx --port=80

kubectl expose deployment nginx --type=LoadBalancer

Check the external IP assignment as below:

$ kubectl get all

NAME READY STATUS RESTARTS AGE

pod/nginx-7848d4b86f-jwbq4 1/1 Running 1 (25m ago) 100m

pod/traefik-794f7579b9-p2wt5 1/1 Running 0 22m

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 25h

service/nginx LoadBalancer 10.96.132.255 192.168.100.10 80:32558/TCP 100m

service/traefik LoadBalancer 10.104.151.125 192.168.100.11 80:31998/TCP,443:31199/TCP 22m

NAME READY UP-TO-DATE AVAILABLE AGE

deployment.apps/nginx 1/1 1 1 100m

deployment.apps/traefik 1/1 1 1 22m

NAME DESIRED CURRENT READY AGE

replicaset.apps/nginx-7848d4b86f 1 1 1 100m

replicaset.apps/traefik-794f7579b9 1 1 1 22m

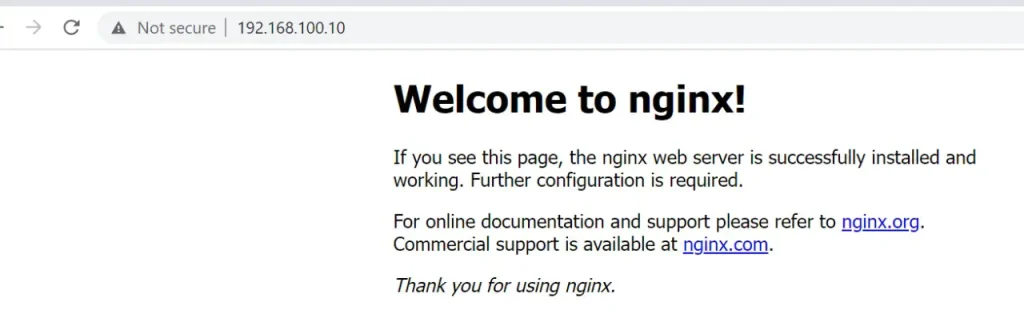

If you access the url http://192.168.100.10 from any device in your local network, you should be able to load nginx default page.

Check services:

$ kubectl get services

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 26h

nginx LoadBalancer 10.96.132.255 192.168.100.10 80:32558/TCP 161m

traefik LoadBalancer 10.104.151.125 192.168.100.11 80:31998/TCP,443:31199/TCP 83m

In this article, we have looked at how to create a k0s Kubernetes cluster using k0sctl, a command-line tool for bootstrapping and managing a k0s cluster. We have also seen how to configure a cloud provider, as well as how to use the Traefik ingress controller and the MetalLB network load balancer to expose the applications in a cluster.