Kubernetes is a container orchestration and runtime platform for building cloud-native applications. It was originally developed by Google, is one of the most active projects on GitHub, and is the most widely used and feature-rich container orchestration platform. Kubernetes forms the foundation of other platforms built on top of it, e.g. Red Hat OpenShift, which adds further capabilities to Kubernetes.

Containers are the building blocks of Kubernetes-based cloud-native applications. Containers fit microservices principles well: each one provides a single unit of functionality, belongs to a single team, has an independent release cycle, and provides deployment and runtime isolation. Typically, one microservice corresponds to one container image.

k0s is a Kubernetes distribution that bundles all the features required to build a Kubernetes cluster. You set it up by copying a single executable file to each target host and running it.

k0s key features

- Is available as a single static binary.

- Offers a self-hosted control plane.

- Supports a variety of storage backends, e.g. MySQL and PostgreSQL.

- Supports custom container runtimes.

- Supports custom Container Network Interface (CNI) plugins, e.g. Calico.

- Supports Linux and Windows Server 2019.

- Has built-in security and conformance.

- Supports x86_64 and arm64.

- Has an elastic control plane.

Setup requirements

- Hardware: 2 CPU cores, 4 GB memory.

- Hard disk: SSD highly recommended, 4 GB required.

- Host operating system: Linux kernel version 3.10 and above, or Windows Server 2019.

- Architecture: x86-64, ARM64, ARMv7.
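The requirements above can be checked on a prospective Linux node before installing anything. The following is a minimal sketch, not part of k0s itself: the function names are hypothetical, and the memory threshold is an assumed allowance for hosts that report slightly less than a full 4 GB.

```shell
# Preflight sketch for the requirements listed above.
# Thresholds mirror the list: 2 CPU cores, 4 GB memory, kernel 3.10 or newer.

# kernel_at_least RELEASE MAJOR MINOR
# Succeeds when RELEASE (e.g. "5.4.0-88-generic") is at least MAJOR.MINOR.
kernel_at_least() {
    release_major=$(printf '%s' "$1" | cut -d. -f1)
    release_minor=$(printf '%s' "$1" | cut -d. -f2 | sed 's/[^0-9].*//')
    [ "$release_major" -gt "$2" ] ||
        { [ "$release_major" -eq "$2" ] && [ "$release_minor" -ge "$3" ]; }
}

# at_least ACTUAL REQUIRED: integer comparison helper.
at_least() {
    [ "$1" -ge "$2" ]
}

# preflight: read values from the running Linux host and report each check.
preflight() {
    cores=$(getconf _NPROCESSORS_ONLN)
    mem_kb=$(awk '/^MemTotal:/ {print $2}' /proc/meminfo)
    if at_least "$cores" 2; then echo "cpu: ok ($cores cores)"; else echo "cpu: below 2 cores"; fi
    # Assumption: 4 GB hosts report a little under 4194304 kB, so allow some slack.
    if at_least "$mem_kb" 3800000; then echo "memory: ok"; else echo "memory: below 4 GB"; fi
    if kernel_at_least "$(uname -r)" 3 10; then echo "kernel: ok"; else echo "kernel: older than 3.10"; fi
}
```

Calling preflight on a node prints one line per check, which makes it easy to spot an undersized host before the installation starts.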

We will now examine how to deploy the Kubernetes cluster on Ubuntu

In the next sections we dive deeper into the installation of k0s and how to bootstrap a single-node Kubernetes cluster with it. We will also demonstrate how to add worker nodes to the cluster and start deploying your microservice workloads.

The steps are ordered to minimize setup failures. Follow along and by the end you should have a functional Kubernetes cluster.

Step 1 : Update your system

To update your Ubuntu system, run the commands below:

sudo apt update && sudo apt upgrade -y

After a successful upgrade of your Ubuntu instances, we recommend a reboot in case the kernel was updated:

[ -e /var/run/reboot-required ] && sudo reboot

Wait for the server to reboot, then log in and proceed to the next step.

Step 2 : Download k0s binary

k0s is packaged as a single binary that bundles all of the features you’ll need to build a Kubernetes cluster. This makes it easy to use even for users who are not Linux-savvy.

To download the k0s binary on your Ubuntu system, run these commands in your terminal.

sudo apt install curl

sudo curl -sSLf https://get.k0s.sh | sudo sh

See our sample output from running the command:

Downloading k0s from URL: https://github.com/k0sproject/k0s/releases/download/v1.31.1+k0s.0/k0s-v1.31.1+k0s.0-amd64

k0s is now executable in /usr/local/bin

From the output, the binary is saved in /usr/local/bin. You can confirm its full path with which:

$ which k0s

/usr/local/bin/k0s

If /usr/local/bin is not already in your PATH, add it with these commands:

echo "export PATH=\$PATH:/usr/local/bin" | sudo tee -a /etc/profile

source /etc/profile

When you echo PATH, you should see /usr/local/bin in the list:

$ echo $PATH

/usr/local/sbin:/usr/local/bin:/usr/sbin:/usr/bin:/sbin:/bin:/usr/games:/usr/local/games:/snap/bin

Step 3 : Bootstrap Single Kubernetes Controller using k0s

To install a single-node k0s that includes both the controller and worker functions with their default configuration, run the following command.

sudo k0s install controller --enable-worker

Wait a few moments and then start the controller service:

sleep 30 && sudo k0s start

Let’s also enable the k0s controller systemd service:

sudo systemctl enable k0scontroller

Confirm that the controller components are now active:

$ sudo k0s status

Version: v1.31.1+k0s.0

Process ID: 9153

Role: controller

Workloads: true

Also check the k0s systemd service status:

$ sudo systemctl status k0scontroller

● k0scontroller.service - k0s - Zero Friction Kubernetes

Loaded: loaded (/etc/systemd/system/k0scontroller.service; enabled; vendor preset: enabled)

Active: active (running) since Sat 2024-11-07 09:18:39 UTC; 1min 18s ago

Docs: https://docs.k0sproject.io

Main PID: 9153 (k0s)

Tasks: 133

Memory: 1.3G

CGroup: /system.slice/k0scontroller.service

├─ 9153 /usr/local/bin/k0s controller --single=true

├─ 9180 /var/lib/k0s/bin/kine --endpoint=sqlite:///var/lib/k0s/db/state.db?more=rwc&_journal=WAL&cache=shared --listen-address=unix:///run/k0s/kine/kine.sock:2379

├─ 9189 /var/lib/k0s/bin/kube-apiserver --kubelet-preferred-address-types=InternalIP,ExternalIP,Hostname --service-account-jwks-uri=https://kubernetes.default.svc/openid/v1/jwks --prof>

├─ 9337 /var/lib/k0s/bin/kube-scheduler --kubeconfig=/var/lib/k0s/pki/scheduler.conf --v=1 --bind-address=127.0.0.1 --leader-elect=true --profiling=false --authentication-kubeconfig=/v>

├─ 9345 /var/lib/k0s/bin/kube-controller-manager --enable-hostpath-provisioner=true --authentication-kubeconfig=/var/lib/k0s/pki/ccm.conf --client-ca-file=/var/lib/k0s/pki/ca.crt --ser>

├─ 9365 /var/lib/k0s/bin/containerd --root=/var/lib/k0s/containerd --state=/run/k0s/containerd --address=/run/k0s/containerd.sock --log-level=info --config=/etc/k0s/containerd.toml

├─ 9378 /var/lib/k0s/bin/kubelet --container-runtime=remote --runtime-cgroups=/system.slice/containerd.service --root-dir=/var/lib/k0s/kubelet --v=1 --cert-dir=/var/lib/k0s/kubelet/pki>

├─ 9528 /var/lib/k0s/bin/containerd-shim-runc-v2 -namespace k8s.io -id 199156f46291cf340a7f0090ad4c1990c2f618caac42ca2146c60f812a977c0a -address /run/k0s/containerd.sock

├─ 9541 /var/lib/k0s/bin/containerd-shim-runc-v2 -namespace k8s.io -id 70ed01bf1f6cc58d70004838244b8d3a7fe5af16e0cf4e6d8d7b38728abdf031 -address /run/k0s/containerd.sock

├─ 9577 /pause

├─ 9607 /pause

├─ 9645 /usr/local/bin/kube-proxy --config=/var/lib/kube-proxy/config.conf --hostname-override=ubuntu-01

├─ 9943 /usr/local/bin/kube-router --run-router=true --run-firewall=true --run-service-proxy=false --bgp-graceful-restart=true --metrics-port=8080

├─10164 /var/lib/k0s/bin/containerd-shim-runc-v2 -namespace k8s.io -id 2702a2a7c4e67acaeacc8b55199d4f6c9701f4da47a666c42c15cf333a579be0 -address /run/k0s/containerd.sock

├─10165 /var/lib/k0s/bin/containerd-shim-runc-v2 -namespace k8s.io -id a68e824c86b3555201e0a1f0490a31a7238a8a26b69cfdb5d2249e4c3cebdf89 -address /run/k0s/containerd.sock

├─10198 /pause

├─10226 /pause

├─10278 /metrics-server --cert-dir=/tmp --secure-port=443 --kubelet-preferred-address-types=InternalIP,ExternalIP,Hostname --kubelet-use-node-status-port --metric-resolution=15s

└─10367 /coredns -conf /etc/coredns/Corefile

...

Step 4 : Configure kubectl access to k0s Kubernetes Cluster

To access your cluster, run kubectl through the k0s binary:

$ sudo k0s kubectl <command>

After a successful deployment, k0s creates a kubeconfig file for you, but sudo privileges are required to read it. Because kubectl is embedded in k0s, it cannot be run without calling the k0s binary:

$ sudo k0s kubectl get nodes

NAME        STATUS   ROLES    AGE   VERSION

ubuntu-01   Ready    <none>   26m   v1.31.1+k0s.0

Let’s copy the kubectl configuration file to our user’s home directory.

mkdir -p ~/.kube

sudo cp /var/lib/k0s/pki/admin.conf ~/.kube/config

sudo chown $USER:$USER ~/.kube/config

kubectl is used to deploy your applications and check the status of your nodes. We can install a standalone kubectl for all users:

### Linux ###

curl -LO "https://dl.k8s.io/release/$(curl -L -s https://dl.k8s.io/release/stable.txt)/bin/linux/amd64/kubectl"

sudo install -o root -g root -m 0755 kubectl /usr/local/bin/kubectl
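Before running the install step above on Linux, it can be worth checking the downloaded binary against its published checksum. The sketch below assumes the checksum file sits next to the binary at the same URL with a .sha256 suffix, as the upstream Kubernetes install instructions describe; the helper name verify_sha256 is hypothetical.

```shell
# verify_sha256 FILE CHECKSUM_FILE
# Succeeds when the SHA-256 digest of FILE equals the first field stored in
# CHECKSUM_FILE (which may hold just the hash, or "hash  filename").
verify_sha256() {
    expected=$(awk '{print $1; exit}' "$2")
    actual=$(sha256sum "$1" | awk '{print $1}')
    [ -n "$expected" ] && [ "$expected" = "$actual" ]
}

# Example, run in the directory holding the downloaded kubectl binary:
#   curl -LO "https://dl.k8s.io/release/$(curl -L -s https://dl.k8s.io/release/stable.txt)/bin/linux/amd64/kubectl.sha256"
#   verify_sha256 kubectl kubectl.sha256 && echo "kubectl: checksum OK"
```

If the check fails, discard the download and fetch it again rather than installing it.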

### macOS Intel ###

curl -LO "https://dl.k8s.io/release/$(curl -L -s https://dl.k8s.io/release/stable.txt)/bin/darwin/amd64/kubectl"

chmod +x ./kubectl

sudo mv ./kubectl /usr/local/bin/kubectl

sudo chown root: /usr/local/bin/kubectl

### macOS Apple Silicon ###

curl -LO "https://dl.k8s.io/release/$(curl -L -s https://dl.k8s.io/release/stable.txt)/bin/darwin/arm64/kubectl"

chmod +x ./kubectl

sudo mv ./kubectl /usr/local/bin/kubectl

sudo chown root: /usr/local/bin/kubectl

Example:

$ kubectl get nodes

NAME        STATUS   ROLES    AGE   VERSION

ubuntu-01   Ready    <none>   11m   v1.31.1+k0s.0

We can check the status of Pods in all namespaces:

$ kubectl get pods --all-namespaces

NAMESPACE     NAME                              READY   STATUS    RESTARTS   AGE

kube-system   kube-proxy-vcm24                  1/1     Running   0          12m

kube-system   kube-router-b2pbg                 1/1     Running   0          12m

kube-system   coredns-5ccbdcc4c4-2vkc2          1/1     Running   0          13m

kube-system   metrics-server-6bd95db5f4-hhngt   1/1     Running   0          13m

If you have a firewall configured on your Ubuntu system, see the k0s networking guide for details on networking and port numbers.
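If the firewall in question is ufw, the rules can be generated as a dry run and reviewed before being applied. This is only a sketch: the helper name is hypothetical, and the port numbers in the example comment (6443 for the Kubernetes API, 10250 for the kubelet, 8132 for konnectivity, 9443 for the k0s join API) are assumptions that should be verified against the k0s networking guide for your version.

```shell
# ufw_allow_rules PORT...
# Print (do not execute) one "ufw allow" command per TCP port given.
ufw_allow_rules() {
    for port in "$@"; do
        printf 'sudo ufw allow %s/tcp\n' "$port"
    done
}

# Example (assumed controller ports, verify against the k0s networking guide):
#   ufw_allow_rules 6443 8132 9443 10250
```

Printing the commands instead of running them keeps the step reviewable; once the list looks right, the output can be piped to sh.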

Step 4 : Create k0s Kubernetes join token for Worker

Join tokens let new nodes join a cluster. A token embeds the information a worker needs to establish trust with the k0s controller, and it authorizes the node to join the cluster as a worker.
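To illustrate why a join token should be treated as a secret, the sketch below decodes one. It assumes the token is a base64-encoded, gzip-compressed kubeconfig, which matches the H4sI prefix (the gzip magic bytes in base64) that k0s tokens start with; the helper name is hypothetical.

```shell
# decode_join_token: read a join token on stdin and print the embedded
# kubeconfig. Assumes the token is gzip data encoded as base64.
decode_join_token() {
    base64 -d | gunzip -c
}

# Example, assuming the token was saved to a file first:
#   decode_join_token < k0s-worker-token.json
```

The decoded text includes the API server address and credentials, so anyone holding the token can attempt to join a node until the token expires.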

Run this command on the first control plane node to generate the token required when adding new worker nodes to the cluster:

sudo k0s token create --role=worker

You should see a long token string printed on your screen. We’ll use this token to join worker nodes to the Kubernetes cluster:

H4sIAAAAAAAC/2xVzW7jPBK85yn8AjOf/pyFDewhlEU7ckSHFNmUeKNFTWSTkhVZ8Y8W++6LeOYDdoG9NbsKVQTZ6HrS/QHq4Xw4dcvZxX+q3Nd5rIfz8unH7E+9fJrNZrNzPVzqYTlrxrE/L//6y58vfj7Pfwb+889FsHyOovBBq+phPPw6VHqsf+ivsTkNh/H+w+hRL2dvuTe+5X7MhEn5Aa0YpCIXKqUeFuyBeWNs/TQXDLGErKiEXnk4zEWKlOdAJOlaeNldcYjZkbV5IHw6pW01oUtVkFgf09R4jEnRv+39PtUbdeQWMyauW+owMp5JGBhCHd5QjoEJHHExbzJf9dI1WwN9Kdrbseb4UwJDSqgw2zgCCYS86JHxFoJxRFiSNqyrrvVH/xuTffffGBdpVyfqkwLEyptLajGBhBVmhdUe1LxsfZnhvuQFU5mFUAUGa+GjLDErEeJN7EhaHkkiBMQUMFCReLlIE+PhBzf38OX7TYQggZmySE3okxXg6Q2JMjvPiEdvmUvf6GG81CuTqYkEfHrZsmMTkC7ziGs0F3iACUh9BFlxBaxwyth5L4/q02xSvXdNRHBqy2Dh9ussMGu3YvY21VJ1ZX4OM4Ctkk0EL/0uX/tbEiZ3aPsracW1BjxmvipYiMs86HfAkSgnFNRtf6wT/5kFjVfHC5JDqkp4uel2ZBCPxCSNYO2NVjI9xJ1jHNjrbj3a0k8FHBaHuj0FJPy4Um/kUvZK4pMPgLJySkO5Jtd9mNqSuwMpSFPJEe2D7MryhWfa+cY4eNarbKu7VJZhf6vXo0+BevWRQBaMyEglmTtNtU0GNaWnbWDvwqqcgbnxLpn0upxYSxxM7KALRlQHoizUYPKxJx/9RXXsnYdk1JwkRqY36kgPHgzVytwzzDbai+5ZkUyqAEa4S/ONedeOvQqvYRUwJ5N5RKUh1GLEgp5pm6LY+ogkBmUAlm0MpodFKAQg47mYtj0SSdpxp16F9WMqy4iJVFDBkBAfFy5er8xLbiBZonxcUJvmpiOBDq5bIyE3wYhVoXb5Cr1TUFEl1LCLFwgSvKOtGSrZDFpUczOh7xmcmMWIewR9+9NQvVchDrRHKA0Z02A25bXXdeteRZCGyiaTXqW5mJrg++/UmkSqq4J8RV63HptES+/ZGiC3BMnDeCbQzOXm5Q7S32mp4tw1ql73RxGwS2yjK3Psmvuni7QAqgCoE6KVgLCSUFR+Q2lgWu3cM5vImyoYYja5q1b42dRQJn3OO+xkm93pZD7FhgWCv2yNvI1MuI2EdCQrsBUngYjHL92ZXRmQbG8J0dgFeYeijL8OsD5HokCCS0OrxBzkYfFqOPqSIi12GMUG0JG99J0uUh+OLKAhEL42TqxIQy1RrEgiGpoz46oxHTjGyVXbMgIx73YrYlnbX4Udb0Rcp2rjNvsEd9k6uxoshrh7uVaW9WSTxiWn18qqyy4ZVyYwbjuhhiW3eYVJyTpC5doxM1U3Y9mq3qBIYJfk60Ug8UckpE/Umu32YD3g2VZYFWkB3Tv3tn92M+aWflAPckjcOhcEQSIee/mNnv75NJt1uq2XM+udn6pTN9a38XdQ/K5/B8XfqfFgfTe+zo/T17529fhjfzqN53HQ/f+qfQ1D3Y0//lZ6NO2hM8tZfOp+HT6e+qH+VQ91V9Xn5exf/376Vn2Y/xH5P/IP48cVxpOtu+Ws7xfhZ/Dz2I9f9/2n/kcUOnM7ek//CQAA//8kZahUBQcAAA==

However, a token that never expires is not very secure. To enhance security, you can set an expiry time for the token and save it to a file with this command:

sudo k0s token create --role=worker --expiry=100h > k0s-worker-token.json

Step 5 : Add workers to the k0s Kubernetes cluster

We’ll start by installing the k0s binary, then join one or more worker nodes to our Kubernetes cluster.

Prepare new worker nodes

These steps are run not on the control node but on the target worker nodes to be joined to the cluster. The steps above for downloading and installing the k0s binary apply.

sudo curl -sSLf https://get.k0s.sh | sudo sh

Then export the binary path:

echo "export PATH=\$PATH:/usr/local/bin" | sudo tee -a /etc/profile

source /etc/profile

With k0s now installed on the worker node, we need to authenticate the worker node with the control node.

Save the generated token in a file:

$ vim k0s-worker-token.json

#Paste and save your join token here

To add the worker node to the cluster, run this command on the worker node.

$ sudo k0s install worker --token-file k0s-worker-token.json

INFO[2023-10-30 09:52:08] Installing k0s service

The token file k0s-worker-token.json contains the token generated on the control node in the previous step.
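As an alternative to pasting the token in an editor, it can be written to a file that only the current user can read. This is a minimal sketch with a hypothetical helper name, not a k0s feature.

```shell
# save_token TOKEN FILE
# Write TOKEN to FILE with owner-only permissions (mode 600).
save_token() {
    (
        umask 077                       # new files are created without group/other access
        printf '%s\n' "$1" > "$2"
    ) && chmod 600 "$2"                 # also tighten a file that already existed
}

# Example:
#   save_token "<paste token here>" k0s-worker-token.json
```

Once the worker has joined, the token file is no longer needed and can be deleted.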

Start the k0s service:

sleep 30 && sudo k0s start

Check the status of the k0s service on the worker node being added to the cluster:

$ sudo k0s status

Version: v1.31.1+k0s.0

Process ID: 3571

Role: worker

Workloads: true

Check new nodes in control plane node

This process takes a while, but once it completes, listing the nodes should produce output like the following.

$ sudo k0s kubectl get nodes

NAME        STATUS   ROLES    AGE   VERSION

ubuntu-01   Ready    <none>   38m   v1.31.1+k0s.0

ubuntu-02   Ready    <none>   40s   v1.31.1+k0s.0

To get more details on each node, add the -o wide option:

$ sudo k0s kubectl get nodes -o wide

NAME        STATUS   ROLES    AGE   VERSION         INTERNAL-IP     EXTERNAL-IP   OS-IMAGE             KERNEL-VERSION     CONTAINER-RUNTIME

ubuntu-01   Ready    <none>   39m   v1.31.1+k0s.0   192.168.20.11   <none>        Ubuntu 20.04.3 LTS   5.4.0-88-generic   containerd://1.5.7

ubuntu-02   Ready    <none>   94s   v1.31.1+k0s.0   192.168.20.12   <none>        Ubuntu 20.04.3 LTS   5.4.0-88-generic   containerd://1.5.7

From the output, the node ubuntu-02 has been added successfully to the cluster.
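Because a new node can take a while to report Ready, the check above can be polled instead of repeated by hand. The sketch below parses the plain kubectl get nodes output shown earlier; the function names, retry count and delay are hypothetical choices.

```shell
# count_ready_nodes: read `kubectl get nodes` output on stdin and print the
# number of rows whose STATUS column is exactly "Ready".
count_ready_nodes() {
    awk 'NR > 1 && $2 == "Ready" { n++ } END { print n + 0 }'
}

# wait_for_ready_nodes EXPECTED TRIES DELAY CMD...
# Run CMD up to TRIES times, DELAY seconds apart, until at least EXPECTED
# nodes are Ready. Returns non-zero if that never happens.
wait_for_ready_nodes() {
    expected=$1
    tries=$2
    delay=$3
    shift 3
    while [ "$tries" -gt 0 ]; do
        ready=$("$@" 2>/dev/null | count_ready_nodes)
        if [ "$ready" -ge "$expected" ]; then
            return 0
        fi
        tries=$((tries - 1))
        sleep "$delay"
    done
    return 1
}

# Example: wait up to 5 minutes for two Ready nodes.
#   wait_for_ready_nodes 2 30 10 sudo k0s kubectl get nodes
```

Note that a cordoned node shows a status such as Ready,SchedulingDisabled and is deliberately not counted by this sketch.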

Step 6 : Add Extra Control Plane Nodes to the cluster (Optional)

Generate a token with the controller role from an existing control node:

sudo k0s token create --role=controller

To save it to a file with an expiry time, use:

sudo k0s token create --role=controller --expiry=100h > k0s-controller-token.json

Prepare new control plane node by installing k0s

To add controllers to a cluster, repeat the preparation steps described above for adding a worker node.

Download the k0s binary.

curl -sSLf https://get.k0s.sh | sudo sh

Export the binary path.

echo "export PATH=\$PATH:/usr/local/bin" | sudo tee -a /etc/profile

source /etc/profile

Add controller node to the cluster

Save the generated token in a file called k0s-controller-token.json

$ vim k0s-controller-token.json

#Paste controller join token here

Then initialize the new controller using the saved token:

$ sudo k0s install controller --token-file k0s-controller-token.json

INFO[2024-11-07 10:08:51] no config file given, using defaults

INFO[2024-11-07 10:08:51] creating user: etcd

INFO[2024-11-07 10:08:52] creating user: kube-apiserver

INFO[2024-11-07 10:08:52] creating user: konnectivity-server

INFO[2024-11-07 10:08:52] creating user: kube-scheduler

INFO[2024-11-07 10:08:52] Installing k0s service

For a controller that can also run workloads, run this instead:

sudo k0s install controller --enable-worker --token-file k0s-controller-token.json

Start the k0s service on the new controller node:

sleep 30 && sudo k0s start

Check the status of the k0s service on the newly added controller node:

$ sudo k0s status

Version: v1.31.1+k0s.0

Process ID: 2358

Role: controller

Workloads: false

Congratulations, a new controller node has been added to the cluster.
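The status output shown above can also be checked from a script, for example to confirm that a node came up with the intended role. This sketch assumes the "Name: value" text layout printed above; the helper name is hypothetical.

```shell
# status_field NAME
# Read `k0s status` text on stdin and print the value of the field NAME.
status_field() {
    awk -F': *' -v key="$1" '$1 == key { print $2; exit }'
}

# Example:
#   role=$(sudo k0s status | status_field Role)
#   [ "$role" = "controller" ] || echo "unexpected role: $role"
```

On a controller installed without --enable-worker, the Workloads field is expected to read false, as in the output above.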

Step 7 : Deploy test application on k0s Kubernetes Cluster

We will test our deployment with an Nginx server. Copy and paste the command below into your terminal.

sudo k0s kubectl apply -f - <<EOF
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-server
spec:
  selector:
    matchLabels:
      app: nginx
  replicas: 2
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
      - name: nginx
        image: nginx:latest
        ports:
        - containerPort: 80
EOF

The command produces the following output:

deployment.apps/nginx-server created

Next, check pod status.

sudo k0s kubectl get pods

The sample output:

$ sudo k0s kubectl get pods

NAME                           READY   STATUS    RESTARTS   AGE

nginx-server-585449566-vckx6   1/1     Running   0          35s

nginx-server-585449566-jj9zp   1/1     Running   0          35s

From the output, 2 nginx pods are running, as specified in the manifest above, where we defined 2 replicas (replicas: 2).
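The replica count can be verified from a script by counting the pods of the deployment that are fully ready. A minimal sketch, assuming the default kubectl get pods column layout shown above; the helper name is hypothetical.

```shell
# count_running_pods PREFIX
# Read `kubectl get pods` output on stdin and print how many pods whose name
# starts with PREFIX are Running with all of their containers ready.
count_running_pods() {
    awk -v prefix="$1" '
        NR > 1 && index($1, prefix) == 1 && $3 == "Running" {
            split($2, ready, "/")          # READY column, e.g. "1/1"
            if (ready[1] == ready[2]) n++
        }
        END { print n + 0 }
    '
}

# Example:
#   sudo k0s kubectl get pods | count_running_pods nginx-server-
```

For interactive use, kubectl rollout status deployment/nginx-server gives the same answer by blocking until the rollout completes.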

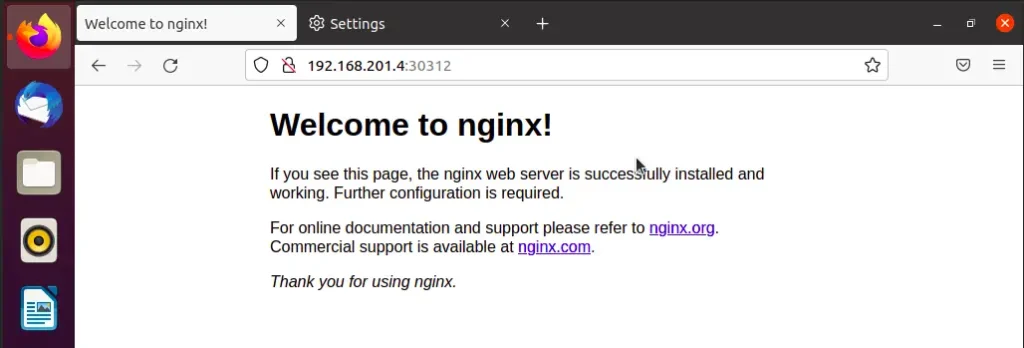

To expose our nginx deployment, run the command:

sudo k0s kubectl expose deployment nginx-server --type=NodePort --port=80

Sample output:

service/nginx-server exposed

The expose command takes a replication controller, service, deployment or pod and exposes it as a new Kubernetes service. For more info visit this link: https://kubernetes.io/docs/reference/kubectl/overview/
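For readers who prefer to keep the service definition in a file, the sketch below prints a manifest that is roughly what the expose command creates for this deployment. It is an approximation under stated assumptions: the selector is taken from the deployment's app: nginx label, the target port is assumed to default to the service port, and the function name is hypothetical.

```shell
# nginx_service_manifest: print a NodePort Service manifest for the
# nginx-server deployment (approximate equivalent of the expose command).
nginx_service_manifest() {
    cat <<'EOF'
apiVersion: v1
kind: Service
metadata:
  name: nginx-server
spec:
  type: NodePort
  selector:
    app: nginx
  ports:
    - port: 80
      targetPort: 80
      protocol: TCP
EOF
}

# Example (instead of, not in addition to, the expose command):
#   nginx_service_manifest | sudo k0s kubectl apply -f -
```

Kubernetes picks the node port automatically here, just as it does for the expose command, so the port number still has to be read back from the service afterwards.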

Confirm that the nginx service has been exposed with this command.

$ sudo k0s kubectl get svc

NAME           TYPE        CLUSTER-IP      EXTERNAL-IP   PORT(S)        AGE

kubernetes     ClusterIP   10.96.0.1       <none>        443/TCP        31m

nginx-server   NodePort    10.105.244.61   <none>        80:30312/TCP   83s

The output reveals that our nginx-server has been exposed on port 30312.
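Since the node port is assigned by Kubernetes and will differ between clusters, it can be read from the service listing rather than copied by hand. A minimal sketch that parses the table format shown above; the helper name is hypothetical.

```shell
# node_port SERVICE
# Read `kubectl get svc` output on stdin and print the node port of SERVICE.
# Prints nothing when the service is missing or is not of type NodePort.
node_port() {
    awk -v svc="$1" '
        $1 == svc && $2 == "NodePort" {
            split($5, parts, "[:/]")       # "80:30312/TCP" -> 80, 30312, TCP
            print parts[2]
            exit
        }
    '
}

# Example:
#   port=$(sudo k0s kubectl get svc | node_port nginx-server)
#   echo "http://<control_node_ip>:$port"
```

The same value is available without text parsing via sudo k0s kubectl get svc nginx-server -o jsonpath='{.spec.ports[0].nodePort}'.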

From your browser, access the service on port 30312 of your Ubuntu server, using the control node IP address followed by the port number.

http://control_node_ip-address:30312

Note: If the connection doesn’t work, check the proxy settings in your browser, make sure the port is allowed through the firewall, and confirm that the nginx-server service exists and its pods are running.

See the screenshot below:

How To Uninstall k0s Kubernetes Cluster

To uninstall k0s from your system, execute the commands below.

sudo k0s stop

sudo k0s reset

Where:

- sudo k0s stop: stops the k0s service.

- sudo k0s reset: removes the k0s installation.

See output below of my k0s node reset:

$ sudo k0s reset

INFO[2023-10-30 09:54:17] * containers steps

INFO[2023-10-30 09:54:23] successfully removed k0s containers!

INFO[2023-10-30 09:54:23] no config file given, using defaults

INFO[2023-10-30 09:54:23] * uninstall service step

INFO[2023-10-30 09:54:23] Uninstalling the k0s service

INFO[2023-10-30 09:54:23] * remove directories step

INFO[2023-10-30 09:54:24] * CNI leftovers cleanup step

INFO[2023-10-30 09:54:24] * kube-bridge leftovers cleanup step

INFO k0s cleanup operations done. To ensure a full reset, a node reboot is recommended.

The node should go to the NotReady state:

$ sudo k0s kubectl get nodes

NAME        STATUS     ROLES    AGE     VERSION

ubuntu-02   NotReady   <none>   9m23s   v1.31.1+k0s.0

ubuntu-01   Ready      <none>   35m     v1.31.1+k0s.0

You can then delete the node object from Kubernetes and, if desired, reinstall:

$ sudo k0s kubectl delete node ubuntu-02

node "ubuntu-02" deleted

$ sudo k0s kubectl get nodes

NAME        STATUS   ROLES    AGE   VERSION

ubuntu-01   Ready    <none>   35m   v1.31.1+k0s.0
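When several workers have been reset, the stale node objects can be listed in one pass before deleting them. The sketch below only prints the candidate names and assumes the default kubectl get nodes layout; the helper name is hypothetical.

```shell
# not_ready_nodes: read `kubectl get nodes` output on stdin and print the
# names of nodes whose STATUS column starts with "NotReady".
not_ready_nodes() {
    awk 'NR > 1 && $2 ~ /^NotReady/ { print $1 }'
}

# Example: review the list, then delete each node by name.
#   sudo k0s kubectl get nodes | not_ready_nodes
#   sudo k0s kubectl delete node <name>
```

Keeping the listing and the deletion as separate steps avoids removing a node that is only temporarily unreachable.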

That marks the end of our article. The key points to remember are downloading and installing k0s on both the control node and the worker nodes, generating tokens on the control node to authenticate the worker nodes, checking nodes and pods, using the kubectl command, and exposing deployment ports. We trust the article will help you on your journey into the Kubernetes world.
