Ceph Storage is a free and open source, software-defined, distributed storage solution designed to be massively scalable for modern data analytics, artificial intelligence (AI), machine learning (ML), and emerging mission-critical workloads. In this article, we will talk about how you can create a Ceph pool with a custom number of placement groups (PGs).

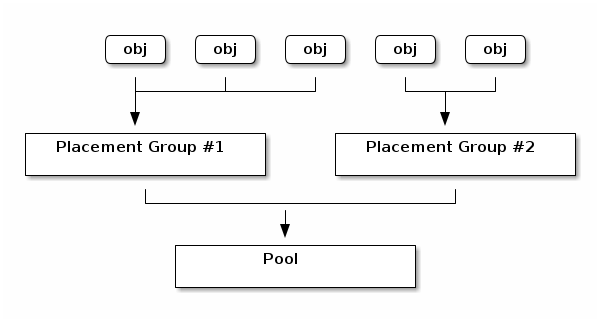

In Ceph terms, placement groups (PGs) are shards or fragments of a logical object pool that place objects as a group into OSDs. Placement groups reduce the amount of per-object metadata Ceph has to track when it stores data in OSDs.

A larger number of placement groups (e.g., 100 per OSD) leads to better balancing. The Ceph client calculates which placement group an object belongs to by hashing the object ID and applying an operation based on the number of PGs in the pool and the ID of the pool. See "Mapping PGs to OSDs" in the Ceph documentation for details.
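To see this mapping for a concrete object, the `ceph osd map` command reports the placement group and OSD set that an object name hashes to. The sketch below wraps it in a small helper; the function name `show_object_placement` is hypothetical, and it assumes the `ceph` CLI is available with a working keyring.

```shell
# Hypothetical helper: print which PG and OSDs an object name maps to.
# Usage: show_object_placement <pool-name> <object-name>
show_object_placement() {
    if [ "$#" -ne 2 ]; then
        echo "usage: show_object_placement <pool-name> <object-name>" >&2
        return 1
    fi
    # 'ceph osd map' computes the mapping from the name alone;
    # the object does not have to exist in the pool.
    ceph osd map "$1" "$2"
}
```

Calling it with the name of any existing pool and an arbitrary object name should print the pool ID, the PG ID, and the acting set of OSDs for that object.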

Calculating the total number of placement groups:

Total PGs = (OSDs * 100) / pool size

For example, let’s say your cluster has 9 OSDs and the default pool size of 3. Your total PG count will be:

Total PGs = (9 * 100) / 3 = 300

Create a Pool

The syntax for creating a pool is:

ceph osd pool create {pool-name} {pg-num}

Where:

- {pool-name} – The name of the pool. It must be unique.

- {pg-num} – The total number of placement groups for the pool. This is optional.
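To pick a value for {pg-num}, the rule of thumb from the previous section can be scripted. This is a minimal sketch: the function name `suggest_pg_num` is hypothetical, and rounding up to the next power of two is an assumption added here (Ceph raises a health warning for pools whose PG count is not a power of two), not part of the formula itself.

```shell
# Hypothetical helper: suggest a pg_num from (OSDs * 100) / pool size.
# Usage: suggest_pg_num <number-of-osds> <pool-size>
suggest_pg_num() {
    osds=$1
    pool_size=$2
    # Rule of thumb from the article, using integer arithmetic.
    target=$(( osds * 100 / pool_size ))
    # Assumption: round up to the next power of two.
    pg=1
    while [ "$pg" -lt "$target" ]; do
        pg=$(( pg * 2 ))
    done
    echo "$pg"
}

suggest_pg_num 9 3   # prints 512 (300 rounded up to a power of two)
```

The result could then be passed as the second argument of the create command, for example `ceph osd pool create k8s_rbd_data "$(suggest_pg_num 9 3)"`. If the autoscaler is enabled for the pool, it may adjust this number later.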

I’ll create a new pool named k8s_rbd_data and let Ceph handle placement group allocation and distribution.

$ ceph osd pool create k8s_rbd_data

pool 'k8s_rbd_data' created

To enable automatic PG scaling, run:

ceph osd pool set k8s_rbd_data pg_autoscale_mode on

Now list the available pools to confirm it was created.

$ ceph osd lspools

1 ceph-blockpool

2 ceph-objectstore.rgw.control

3 ceph-filesystem-metadata

4 ceph-objectstore.rgw.meta

5 ceph-filesystem-replicated

6 .mgr

7 ceph-objectstore.rgw.log

8 ceph-objectstore.rgw.buckets.index

9 ceph-objectstore.rgw.buckets.non-ec

10 ceph-objectstore.rgw.otp

11 .rgw.root

12 ceph-objectstore.rgw.buckets.data

14 k8s_rbd_data

Ceph filesystem pool example

## Create Metadata Pool:

ceph osd pool create rook_cephfs_metadata 128

ceph osd pool application enable rook_cephfs_metadata cephfs

## Create Data Pool:

ceph osd pool create rook_cephfs_data 128

ceph osd pool application enable rook_cephfs_data cephfs

## Associate Pools with the Filesystem:

ceph fs new cephfilesystem rook_cephfs_metadata rook_cephfs_data

Associate Pool to Application

Pools need to be associated with an application before use. Pools that will be used with CephFS or pools that are automatically created by RGW are automatically associated.
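Before enabling an application, it can help to check what a pool is already tagged with. The sketch below uses `ceph osd pool application get`; the helper name `pool_has_app` is hypothetical, and the check assumes the command prints JSON keyed by application name (for example `{"rbd": {}}`), so treat the `grep` match as illustrative rather than robust parsing.

```shell
# Hypothetical helper: succeed if a pool is already tagged with an application.
# Usage: pool_has_app <pool-name> <app>   (app is cephfs, rbd, or rgw)
pool_has_app() {
    pool=$1
    app=$2
    # Assumption: the output is JSON keyed by application name, e.g. {"rbd": {}}.
    ceph osd pool application get "$pool" | grep -q "\"$app\""
}
```

A call such as `pool_has_app k8s_rbd_data rbd && echo "already enabled"` would then skip the enable step for pools that are already associated.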

### Ceph Filesystem ###

sudo ceph osd pool application enable <pool-name> cephfs

### Ceph Block Device ###

sudo ceph osd pool application enable <pool-name> rbd

### Ceph Object Gateway ###

sudo ceph osd pool application enable <pool-name> rgw

Example:

$ ceph osd pool application enable k8s_rbd_data rbd

enabled application 'rbd' on pool 'k8s_rbd_data'

Pools that are intended for use with RBD should be initialized using the rbd tool:

rbd pool init k8s_rbd_data

To disable the app, use:

ceph osd pool application disable <poolname> <app> {--yes-i-really-mean-it}

To obtain I/O information for a specific pool, or for all pools, execute:

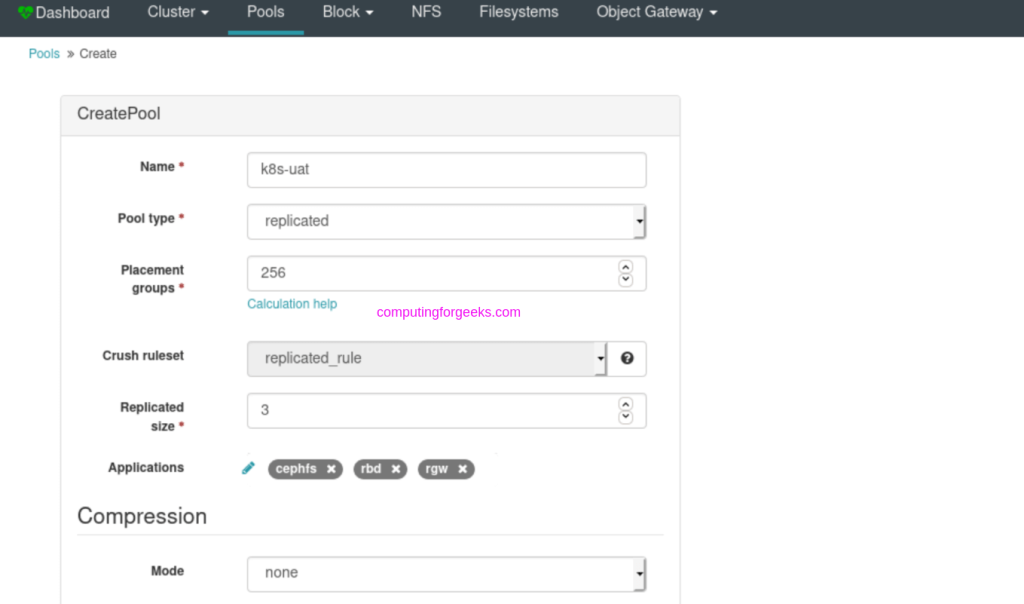

ceph osd pool stats [{pool-name}]

Doing it from Ceph Dashboard

Log in to your Ceph Management Dashboard and create a new pool under Pools > Create.

Deleting a Pool

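To delete a pool, execute the command below. The pool name is given twice as a safety check, and the monitors refuse the request unless pool deletion has been allowed (the mon_allow_pool_delete option set to true).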

sudo ceph osd pool delete {pool-name} {pool-name} --yes-i-really-really-mean-it

Stay tuned for more Ceph-related articles to follow.
