First, a brief introduction to Kubernetes: Kubernetes is an open-source container orchestration engine that makes it easier to automate the deployment, scaling, and management of containerized applications. The project is free, open source, and hosted by the Cloud Native Computing Foundation (CNCF).

MicroK8s

MicroK8s is a lightweight Kubernetes distribution for local systems. Canonical, its primary developer, describes the platform as a “low-ops, minimal production” Kubernetes distribution. MicroK8s might be the right distribution for you if you’ve ever wanted to build a high-availability Kubernetes cluster without provisioning numerous machines or handling intricate configurations.

MicroK8s ships as a single snap package, so most Linux distributions, and any other system that can run Snap, can install it. It runs on PCs, desktops, and Internet of Things (IoT) devices such as the Raspberry Pi. It is not advised to use this deployment for production settings; instead, it should only be used for offline development, prototyping, and testing, or as a small, affordable, and dependable Kubernetes for CI/CD.

Features of MicroK8s

- Fast install: In less than 60 seconds, get a complete Kubernetes system up and operating.

- Small: Using MicroK8s in your CI/CD pipelines will allow you to get on with your day without interruptions.

- Safe: Uses cutting-edge isolation to operate on your laptop without risk.

- Complete: Includes a docker registry that enables you to launch, manage, and utilize each and every container on the system.

- Updates: To update when a new major version is released, use a single command (or schedule it to update automatically).

- Rich in features: All the features you would want to try on a small, standard Kubernetes are built in. Enable them and get going.

- GPU Passthrough: Give MicroK8s a GPGPU so your Docker containers can make use of CUDA.

- Upstream: CNCF binaries are delivered to your laptop, along with updates and upgrades.

k0s

k0s is an open-source, feature-rich Kubernetes distribution that comes preconfigured with every feature required to set up a Kubernetes cluster. Because of its modest system requirements, flexible deployment options, and straightforward design, k0s is well suited for:

- Any cloud

- Bare metal

- Edge and IoT

With k0s, installing and managing a CNCF-certified Kubernetes distribution is remarkably simplified. Developer friction is eliminated, and new clusters can be bootstrapped in a matter of minutes with k0s. This makes it simple for anyone to get started, even without any prior Kubernetes knowledge or experience.

Because vulnerability fixes can be applied directly to the k0s distribution, k0s is as secure as regular Kubernetes. Moreover, k0s is open source!

Key features of k0s

- Certified and 100% upstream Kubernetes.

- There are several installation methods available, including single-node, multi-node, airgap, and Docker.

- k0sctl provides automatic lifecycle management, including upgrade, backup, and restore.

- Accessible as a single binary that only requires the kernel at runtime.

- Adaptable deployment options that come standard with control plane isolation

- Supports x86-64, ARM64, and ARMv7 processors.

- Allows for customized Container Runtime Interface (CRI) plugins, with the default being containerd.

k0s has additional features that are covered on its official website.

Set up MicroK8s and k0s Kubernetes on Debian 12

This guide walks you through installing MicroK8s and k0s Kubernetes clusters on Debian 12. Follow each step for a successful installation of both MicroK8s and k0s.

1. Installing MicroK8s on Debian 12

Run the commands below before beginning the installation:

sudo apt update

[ -e /var/run/reboot-required ] && sudo reboot

You’re ready to go!

Install MicroK8s using Snap

We will install MicroK8s using Snap. Run the command below to install snapd on Debian 12.

sudo apt install -y snapd

Once Snap is installed, you can use it to install MicroK8s. Snaps are updated regularly to keep up with every Kubernetes release.

$ sudo snap install microk8s --classic

2023-11-12T15:50:25+03:00 INFO Waiting for automatic snapd restart...

microk8s (1.27/stable) v1.27.7 from Canonical✓ installed

You can list every published version using:

$ snap info microk8s

name: microk8s

summary: Kubernetes for workstations and appliances

publisher: Canonical✓

store-url: https://snapcraft.io/microk8s

contact: https://github.com/canonical/microk8s

license: Apache-2.0

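...............................

MicroK8s begins building a single-node Kubernetes cluster as soon as it is installed. Use the command below to check the deployment’s status. If a microk8s command reports insufficient permissions, prefix it with sudo or add your user to the microk8s group.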

$ microk8s.status

microk8s is running

high-availability: no

datastore master nodes: 127.0.0.1:19001

datastore standby nodes: none

addons:

enabled:

dns # (core) CoreDNS

ha-cluster # (core) Configure high availability on the current node

helm # (core) Helm - the package manager for Kubernetes

helm3 # (core) Helm 3 - the package manager for Kubernetes

disabled:

cert-manager # (core) Cloud native certificate management

community # (core) The community addons repository

dashboard # (core) The Kubernetes dashboard

gpu # (core) Automatic enablement of Nvidia CUDA

host-access # (core) Allow Pods connecting to Host services smoothly

hostpath-storage # (core) Storage class; allocates storage from host directory

ingress # (core) Ingress controller for external access

kube-ovn # (core) An advanced network fabric for Kubernetes

mayastor # (core) OpenEBS MayaStor

metallb # (core) Loadbalancer for your Kubernetes cluster

metrics-server # (core) K8s Metrics Server for API access to service metrics

minio # (core) MinIO object storage

observability # (core) A lightweight observability stack for logs, traces and metrics

prometheus # (core) Prometheus operator for monitoring and logging

rbac # (core) Role-Based Access Control for authorisation

registry # (core) Private image registry exposed on localhost:32000

storage # (core) Alias to hostpath-storage add-on, deprecated

To view cluster information, use:

$ microk8s.kubectl cluster-info

Kubernetes control plane is running at https://127.0.0.1:16443

CoreDNS is running at https://127.0.0.1:16443/api/v1/namespaces/kube-system/services/kube-dns:dns/proxy

To further debug and diagnose cluster problems, use 'kubectl cluster-info dump'.

Get the Kubernetes node’s status as well.

$ microk8s.kubectl get nodes

NAME STATUS ROLES AGE VERSION

debian Ready <none> 5m16s v1.27.7

You can create an alias for microk8s.kubectl by running the following:

echo "alias kubectl='microk8s.kubectl'" >>~/.bashrc

source ~/.bashrc

After that, you can use the kubectl command:

kubectl get nodes -o wide

Enable Kubernetes Addons on MicroK8s

You can enable addons by using the microk8s.enable command.

microk8s.enable dashboard dns

Sample output:

Infer repository core for addon dashboard

Infer repository core for addon dns

WARNING: Do not enable or disable multiple addons in one command.

This form of chained operations on addons will be DEPRECATED in the future.

Please, enable one addon at a time: 'microk8s enable <addon>'

Enabling Kubernetes Dashboard

Infer repository core for addon metrics-server

Enabling Metrics-Server

serviceaccount/metrics-server created

clusterrole.rbac.authorization.k8s.io/system:aggregated-metrics-reader created

clusterrole.rbac.authorization.k8s.io/system:metrics-server created

rolebinding.rbac.authorization.k8s.io/metrics-server-auth-reader created

clusterrolebinding.rbac.authorization.k8s.io/metrics-server:system:auth-delegator created

clusterrolebinding.rbac.authorization.k8s.io/system:metrics-server created

service/metrics-server created

deployment.apps/metrics-server created

apiservice.apiregistration.k8s.io/v1beta1.metrics.k8s.io created

clusterrolebinding.rbac.authorization.k8s.io/microk8s-admin created

Metrics-Server is enabled

Applying manifest

serviceaccount/kubernetes-dashboard created

service/kubernetes-dashboard created

secret/kubernetes-dashboard-certs created

secret/kubernetes-dashboard-csrf created

secret/kubernetes-dashboard-key-holder created

configmap/kubernetes-dashboard-settings created

role.rbac.authorization.k8s.io/kubernetes-dashboard created

clusterrole.rbac.authorization.k8s.io/kubernetes-dashboard created

rolebinding.rbac.authorization.k8s.io/kubernetes-dashboard created

clusterrolebinding.rbac.authorization.k8s.io/kubernetes-dashboard created

deployment.apps/kubernetes-dashboard created

service/dashboard-metrics-scraper created

deployment.apps/dashboard-metrics-scraper created

secret/microk8s-dashboard-token created

If RBAC is not enabled access the dashboard using the token retrieved with:

microk8s kubectl describe secret -n kube-system microk8s-dashboard-token

Use this token in the https login UI of the kubernetes-dashboard service.

In an RBAC enabled setup (microk8s enable RBAC) you need to create a user with restricted

permissions as shown in:

https://github.com/kubernetes/dashboard/blob/master/docs/user/access-control/creating-sample-user.md

Addon core/dns is already enabled

Enable the Storage addon:

$ microk8s.enable storage

deployment.apps/hostpath-provisioner created

storageclass.storage.k8s.io/microk8s-hostpath created

serviceaccount/microk8s-hostpath created

clusterrole.rbac.authorization.k8s.io/microk8s-hostpath created

clusterrolebinding.rbac.authorization.k8s.io/microk8s-hostpath created

Storage will be available soon.

To enable the Istio addon, first enable the community addons repository:

microk8s enable community

microk8s.enable istio

The same format applies to other addons. Verify the enabled addons using:

$ microk8s.status

microk8s is running

high-availability: no

datastore master nodes: 127.0.0.1:19001

datastore standby nodes: none

addons:

enabled:

dashboard # (core) The Kubernetes dashboard

dns # (core) CoreDNS

ha-cluster # (core) Configure high availability on the current node

helm # (core) Helm - the package manager for Kubernetes

helm3 # (core) Helm 3 - the package manager for Kubernetes

hostpath-storage # (core) Storage class; allocates storage from host directory

metrics-server # (core) K8s Metrics Server for API access to service metrics

storage # (core) Alias to hostpath-storage add-on, deprecated

disabled:

cert-manager # (core) Cloud native certificate management

community # (core) The community addons repository

gpu # (core) Automatic enablement of Nvidia CUDA

host-access # (core) Allow Pods connecting to Host services smoothly

ingress # (core) Ingress controller for external access

kube-ovn # (core) An advanced network fabric for Kubernetes

mayastor # (core) OpenEBS MayaStor

metallb # (core) Loadbalancer for your Kubernetes cluster

minio # (core) MinIO object storage

observability # (core) A lightweight observability stack for logs, traces and metrics

prometheus # (core) Prometheus operator for monitoring and logging

rbac # (core) Role-Based Access Control for authorisation

registry # (core) Private image registry exposed on localhost:32000

Disable Addons

To disable an addon, use the microk8s.disable command.

$ microk8s.disable istio

Disabling Istio

namespace "istio-system" deleted

Istio is terminating

Deploying Pods and Containers on MicroK8s

Deployments follow the standard Kubernetes procedure. The following example creates an Nginx deployment with two replicas.

$ microk8s.kubectl create deployment nginx --image nginx --replicas 2

deployment.apps/nginx created

$ microk8s.kubectl get deployments

NAME READY UP-TO-DATE AVAILABLE AGE

nginx 2/2 2 2 95s

$ microk8s.kubectl get pods

NAME READY STATUS RESTARTS AGE

nginx-77b4fdf86c-kt26p 1/1 Running 0 2m44s

nginx-77b4fdf86c-zbmxl 1/1 Running 0 2m44s

Expose the service:

$ microk8s.kubectl expose deployment nginx --port 80 --target-port 80 --type ClusterIP --selector=app=nginx --name nginx

service/nginx exposed

To delete the Deployment and the Service:

$ microk8s.kubectl delete deployment nginx

deployment.extensions "nginx" deleted

$ microk8s.kubectl delete service nginx

service "nginx" deleted

Stopping and Restarting MicroK8s

MicroK8s can be easily shut down when not in use without having to uninstall it.

$ microk8s.stop

Stopped.

Start MicroK8s again by running:

$ microk8s.start

Started.

Removing MicroK8s

If you want to completely remove MicroK8s, stop all running pods first:

microk8s.reset

Next, remove the MicroK8s snap.

sudo snap remove microk8s

2. Install k0s on Debian 12

This section’s steps are organized to guarantee that there are no setup errors. Simply follow the instructions, and you ought to have a working Kubernetes cluster by the end.
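Before downloading anything, it can help to confirm that the machine's architecture is one that k0s lists as supported (x86-64, ARM64, ARMv7). The sketch below is a hypothetical helper and not part of k0s: the function name k0s_arch_supported and the mapping from uname -m strings to those architecture names are assumptions.

```shell
# Sketch: map a `uname -m` string to one of the architectures k0s lists as
# supported. The accepted strings on the left are assumptions, not k0s output.
k0s_arch_supported() {
  case "$1" in
    x86_64|amd64)   echo "x86-64" ;;
    aarch64|arm64)  echo "ARM64" ;;
    armv7l|armv7*)  echo "ARMv7" ;;
    *)              echo "unsupported"; return 1 ;;
  esac
}

# Usage: check the current machine before installing.
# k0s_arch_supported "$(uname -m)" || echo "k0s may not run on this machine"
```

The function returns a non-zero status for unknown architectures, so it can gate the rest of an install script.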

Download k0s binary

k0s comes packaged as a single binary that includes everything you need to build a Kubernetes cluster. This keeps it usable even for people with little Linux experience. Download the binary by running:

sudo apt install curl

sudo curl -sSLf https://get.k0s.sh | sudo sh

The commands below add /usr/local/bin to the PATH so that the k0s binary can be found.

echo "export PATH=\$PATH:/usr/local/bin" | sudo tee -a /etc/profile

source /etc/profile

/usr/local/bin should appear in the output when you echo the PATH.

$ echo $PATH

/usr/local/bin:/usr/bin:/bin:/usr/local/games:/usr/games:/usr/local/bin

Bootstrapping a Single Kubernetes Controller using k0s

Use the following command to install a single-node k0s with the controller and worker roles in their default configuration.

sudo k0s install controller --enable-worker

Then start the controller service after a short wait:

sleep 30 && sudo k0s start

We’ll also enable the k0s controller systemd service:

sudo systemctl enable k0scontroller

Verify that the controller’s components are now operational:

$ sudo k0s status

Version: v1.28.3+k0s.0

Process ID: 8968

Role: controller

Workloads: true

SingleNode: false

Kube-api probing successful: true

Also, check the status of the k0s systemd service:

$ sudo systemctl status k0scontroller

● k0scontroller.service - k0s - Zero Friction Kubernetes

Loaded: loaded (/etc/systemd/system/k0scontroller.service; enabled; preset: enabled)

Active: active (running) since Fri 2023-11-10 16:03:10 EAT; 1min 3s ago

Docs: https://docs.k0sproject.io

Main PID: 8968 (k0s)

Tasks: 132

Memory: 1.2G

CPU: 27.751s

CGroup: /system.slice/k0scontroller.service

├─ 8968 /usr/local/bin/k0s controller --enable-worker=true

├─ 8977 /var/lib/k0s/bin/etcd --log-level=info --listen-client-urls=https://127.0.0.1:2379 --peer-key-file=/var/lib/k0s/pki/etcd/peer.key --name=debian --peer-cert-file=/var/lib/k0s/pki/etcd/peer.c>

├─ 8990 /var/lib/k0s/bin/kube-apiserver --secure-port=6443 --client-ca-file=/var/lib/k0s/pki/ca.crt --tls-cert-file=/var/lib/k0s/pki/server.crt --service-account-signing-key-file=/var/lib/k0s/pki/s>

├─ 9024 /usr/local/bin/k0s api --data-dir=/var/lib/k0s

Configure kubectl access to k0s Kubernetes Cluster

k0s bundles kubectl. Run it through the k0s binary to access your cluster:

sudo k0s kubectl <command>

Following a successful deployment, k0s generates a KUBECONFIG file for you to use, but using it requires sudo rights. The embedded kubectl cannot be executed without first calling the k0s binary:

$ sudo k0s kubectl get nodes

NAME STATUS ROLES AGE VERSION

debian01 Ready control-plane 33h v1.28.3+k0s

Let us copy the kubectl configuration file to our user’s home directory.

mkdir -p ~/.kube

sudo cp /var/lib/k0s/pki/admin.conf ~/.kube/config

sudo chown $USER:$USER ~/.kube/config

You can monitor the status of your nodes or deploy your application using kubectl. Let’s install kubectl for all users.

### Linux ###

curl -LO "https://dl.k8s.io/release/$(curl -L -s https://dl.k8s.io/release/stable.txt)/bin/linux/amd64/kubectl"

sudo install -o root -g root -m 0755 kubectl /usr/local/bin/kubectl

### macOS Intel ###

curl -LO "https://dl.k8s.io/release/$(curl -L -s https://dl.k8s.io/release/stable.txt)/bin/darwin/amd64/kubectl"

chmod +x ./kubectl

sudo mv ./kubectl /usr/local/bin/kubectl

sudo chown root: /usr/local/bin/kubectl

### macOS Apple Silicon ###

curl -LO "https://dl.k8s.io/release/$(curl -L -s https://dl.k8s.io/release/stable.txt)/bin/darwin/arm64/kubectl"

chmod +x ./kubectl

sudo mv ./kubectl /usr/local/bin/kubectl

sudo chown root: /usr/local/bin/kubectl

For instance:

$ kubectl get nodes

NAME STATUS ROLES AGE VERSION

debian01 Ready control-plane 2d2h v1.28.3+k0s

We can also check the state of Pods across all namespaces:

$ kubectl get pods --all-namespaces

NAMESPACE NAME READY STATUS RESTARTS AGE

kube-system coredns-85df575cdb-fxvl2 1/1 Running 0 13d

kube-system konnectivity-agent-h7jpj 1/1 Running 0 13d

kube-system kube-proxy-2jjg9 1/1 Running 0 13d

kube-system kube-router-9xwbz 1/1 Running 0 13d

kube-system metrics-server-7556957bb7-tn5cj 1/1 Running 0 13d

Create k0s Kubernetes join token for Worker

Worker nodes join the cluster using a token. The token carries the information that the k0s controller and a worker need to trust each other, and it allows the node to join the cluster as a worker.

To create the token needed when adding new worker nodes to the cluster, run the following command on the first Control plane node:

sudo k0s token create --role=worker

Your screen should display a lengthy token string. This token will be used to connect worker nodes to the Kubernetes cluster:

H4sIAAAAAAAC/2yV3Y7qOhKF7/speIHex/lDB6S5mIANOxBz7Lhs4rskzu6QOCFAgHRG8+6jZvdIM9K5K1eVvmVZ1lpZf5Ll9XY6d8vZw3kr7P02lNfb8u199l0v32az2awor8Pp16nIhvI9uw/V+XoaPt9NNmTL2T5Bwz5xVhxMJE7hmssIEtARQwT4a4aGVeNECfCQY7pmSvYaES+BKNTISthUp1yZOyfnkXfSU7a/xyK6yEZ36lit01oSgzhX0O9zp4+yra5FQziH545ZEhpkMJeGMku2TBDJgfgCgip2dK9stTOyT6Ed61KQi5I81KC9eGtp2UhfSB6W2LlIwSnHUcW74ll+9L9nwOv/nQmIuhLrC5NypVGgWEOoxPxo1kTnUgdp66iY9Kk4ch030tOuIRk4YYzNGjyyXVkapTXFAHLFJJEMMEogwgaR126CyOPrTQC4lK0Vqahq7tA9XdNdvNH7/RSG6rRIWUchtfAJgh5Ki3dMxU/W2H3WDgfd6T5veQMKPVMVeKkXohjrTOPxbFAwF1DdkuTmyE20yTrDdh55pqo/xsrwvCNZqn4+y6bvctY/aMuzQlRP1ThZeQxvZsN5XBdPuo12FIqH/FxYszYcYKT51uhyYwO+1RfW0CE52mcCthI1H1VDeamCY44W/qrthdpWwCwZY6WlqqNOr81kwA45qYYMD27q9PMCk0QJ+YixPieWDtmWPvefi/NhG+1yUZ3VJlAHAp9MOXNBil0u6SVXgUigv0t7DlJUPNLGaUVrV3ptJzFRzjzs71G0V3V4ytzRalLN966e4qPEtLVb1vFUbRbadHTO3cVYfvR1McmTbCuS1dU6nxo/VfZM1fBX4YQeQDA3G3oALK+AKp+10klrwqRLKVh9gqlxtRNhpgxlDQm52/OsicJV44QUmzCWsuFbQ9hp4QHI0CC7Ym0fAo46YfVPaJwVU6nPIQIGPAT4eAj4+eQIj1JxrB1yZE2UUHQLMuTv9u7TB1kEaa3PsBokO2qVtnJSDUnLhhxYa66Fqq4ZFIGZwq8/OPGGhALR8EufOXKSTih3E3f0NuQSyZiy3i1b5hhX3mAiAU8Gn6MA09WC8k3fghu5yZZO0iU3IwuUClPlyYLpYzQUU5RRPCQMilFt+0dmowQmyjgs8KrtPe4GB9PwALY0SEX6zBWdWBtd4jaa514cCJf6yenmJcSO1B2rxP7TTTryyWBMExF2TEYZIOkewOBiHfk7VOxgkuQAtD6IKi6wo4SCa6yC+QEMytahZMJewHUOgoCT1umDTfxnLCMCHYeda4GvjScVDantcVaTC6AFjp/9Z+bxu3D9gNdmJ23VJ8Q8chztBV6c42P12LnGV8fwGKu+5SLSAt3GhMix3EjKJukfgM9hbcZiHUbQai0JjVYNfrA6nrj60ythhNj2MevIvRDW37lBbRR6KEXPYrLeYcPbdPp46BoesJF3aKjMNguXQeFmbtQqCIKig2vS4R11GyetK/6XQLtvbyaiYR8MyURiu0mAhhLDy5f37PyPl8HfyuujvC5n1TD0t+UffzgL94cz//OHi9APx3GWc9/33mazLmvL5axBt7fi3A3lOPyOit/1d1R858Zr66txv71O97y05fCen8/Dbbhm/f/T7tdr2Q3v/yW9ms2pM8vZ6tz9On289dfyV3ktu6K8LWf/+vfbF/Ul/g35G/xL+HWF4dyU3XLmek729H/Mr8hvJvMoref788/q7T8BAAD//9nAdvsGBwAA

However, this isn’t a particularly safe way of doing it. To increase security, you can use this command to set an expiration time for the token:

sudo k0s token create --role=worker --expiry=100h > k0s-worker-token.json

Add workers to the k0s Kubernetes cluster

Installing the k0s binary will be the first step before connecting one or more worker nodes to our Kubernetes cluster.

Prepare new worker nodes

These actions are carried out on the target worker nodes that are going to be added to the cluster, not on the control node. To download and install the k0s binary file, follow the instructions below.

sudo curl -sSLf https://get.k0s.sh | sudo sh

Now, export the binary PATH.

echo "export PATH=\$PATH:/usr/local/bin" | sudo tee -a /etc/profile

source /etc/profile

Now that k0s is set up on the worker node, we must authenticate the worker node with the control node.

Save the token created earlier to a file:

$ vim k0s-worker-token.json

#Paste and save your join token here

Run this command on the worker node to join it to the control node:

sudo k0s install worker --token-file k0s-worker-token.json

The file k0s-worker-token.json contains the token created on the control node in the preceding step.
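The sample token shown earlier begins with H4sI, which is what gzip data looks like once it is base64-encoded, so a join token can probably be inspected by reversing those two steps. The helper below is a sketch under that assumption; decode_join_token is a hypothetical name, not a k0s command, and the decoded content includes credentials, so treat the output as a secret.

```shell
# Sketch: decode a k0s join token read from stdin.
# Assumption: the token is gzip-compressed data encoded as base64.
decode_join_token() {
  # Strip whitespace and newlines, undo base64, then decompress to stdout.
  tr -d '[:space:]' | base64 -d | gzip -dc
}

# Usage: see which API server address the token points at.
# decode_join_token < k0s-worker-token.json | grep 'server:'
```

Checking the server address this way can catch a token that was generated on the wrong control node before the worker tries to join.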

Now start the k0s service:

sleep 30 && sudo k0s start

Verify the k0s service status on the worker node that is being added to the cluster:

$ sudo k0s status

Version: v1.28.3+k0s.0

Process ID: 4216

Role: worker

Workloads: true

SingleNode: false

Kube-api probing successful: true

Check new nodes in control plane node

After a while, listing the nodes should produce output similar to the one below.

$ sudo k0s kubectl get nodes

NAME STATUS ROLES AGE VERSION

debian01 Ready control-plane 13d v1.28.3+k0s

debian02 Ready <none> 28s v1.28.3+k0s

Add the -o wide option to get more information about each node:

$ sudo k0s kubectl get nodes -o wide

NAME STATUS ROLES AGE VERSION INTERNAL-IP EXTERNAL-IP OS-IMAGE KERNEL-VERSION CONTAINER-RUNTIME

debian01 Ready control-plane 13d v1.28.3+k0s 192.168.200.111 <none> Debian GNU/Linux 12 (bookworm) 6.1.0-13-amd64 containerd://1.7.8

debian02 Ready <none> 44s v1.28.3+k0s 192.168.200.173 <none> Debian GNU/Linux 12 (bookworm) 6.1.0-9-amd64 containerd://1.7.8

Based on the output, my debian02 node has been added to the cluster successfully.
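When several workers are joining, reading the table by eye gets tedious. As a rough sketch, the hypothetical helper below (count_ready_nodes is an illustrative name) counts the rows whose STATUS column is exactly Ready in the plain-text output of kubectl get nodes. It assumes the default column layout shown above.

```shell
# Sketch: count nodes whose STATUS column is exactly "Ready".
# Reads the default `kubectl get nodes` table from stdin; the first line is
# the header and is skipped.
count_ready_nodes() {
  awk 'NR > 1 && $2 == "Ready" { n++ } END { print n + 0 }'
}

# Usage on the control plane node:
# sudo k0s kubectl get nodes | count_ready_nodes
```

Nodes in states such as NotReady are not counted, so comparing the result with the number of machines you joined shows whether any worker is still starting up.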

Deploy test application on k0s Kubernetes Cluster

We’ll use an Nginx server to test our deployment. Create a file named nginx-deployment.yaml by copying and pasting the content below into your terminal.

cat <<EOF > nginx-deployment.yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-server
spec:
  replicas: 2
  selector:
    matchLabels:
      app: nginx
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
      - name: nginx
        image: nginx:latest
        ports:
        - containerPort: 80
---
apiVersion: v1
kind: Service
metadata:
  name: nginx-service
spec:
  type: NodePort
  selector:
    app: nginx
  ports:
  - name: http
    port: 80
    targetPort: 80
    nodePort: 30000
EOF

The YAML file above contains the Deployment and Service specifications. It creates the nginx-server deployment and the nginx-service service.
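A quick way to confirm that the file holds both resources before applying it is to list the kind of each YAML document. The helper below is only a sketch: manifest_kinds is a hypothetical name, and it assumes each top-level kind: key starts at the beginning of a line, as in the file above.

```shell
# Sketch: print the resource kind of every document in a manifest.
# Reads the files given as arguments, or stdin when none are given.
manifest_kinds() {
  awk '/^kind:/ { print $2 }' "$@"
}

# Usage:
# manifest_kinds nginx-deployment.yaml
```

For the file above this should list Deployment followed by Service. For a fuller check, kubectl's --dry-run=client option on apply validates the manifest without creating anything.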

Now, configure the new service and deployment:

$ sudo k0s kubectl apply -f nginx-deployment.yaml

deployment.apps/nginx-server created

service/nginx-service created

Check the pod status after that.

$ sudo k0s kubectl get pods

NAME READY STATUS RESTARTS AGE

nginx-server-7c79c4bf97-8pxcx 1/1 Running 0 31s

nginx-server-7c79c4bf97-f6l28 1/1 Running 0 31s

As the output shows, two nginx pods are running, matching the two replicas defined in the configuration file above (replicas: 2).
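Pods can take a little while to reach the Running state, and a fixed sleep either waits too long or not long enough. One option is a small polling helper that reruns a check until it succeeds. The function below is a generic sketch; retry is a hypothetical name and is not part of k0s or kubectl.

```shell
# Sketch: retry ATTEMPTS DELAY COMMAND...
# Runs COMMAND until it succeeds or ATTEMPTS runs are used up, sleeping
# DELAY seconds between runs. Returns 0 on success and 1 otherwise.
retry() {
  attempts=$1
  delay=$2
  shift 2
  i=1
  while [ "$i" -le "$attempts" ]; do
    if "$@"; then
      return 0
    fi
    i=$((i + 1))
    # Only sleep when another attempt will follow.
    [ "$i" -le "$attempts" ] && sleep "$delay"
  done
  return 1
}

# Usage: wait for the deployment rollout, checking up to 20 times.
# retry 20 3 sudo k0s kubectl rollout status deployment/nginx-server --timeout=5s
```

The same helper could stand in for the fixed 30-second wait used before k0s start, for example by retrying sudo k0s status until it succeeds.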

Use this command to verify that the nginx service has been exposed.

$ sudo k0s kubectl get svc

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 13d

nginx-service NodePort 10.107.181.239 <none> 80:30000/TCP 55sUtilizing NodePort, the service is accessible on port, 30000. We can use the worker-node IP and port to access the application through the browser.

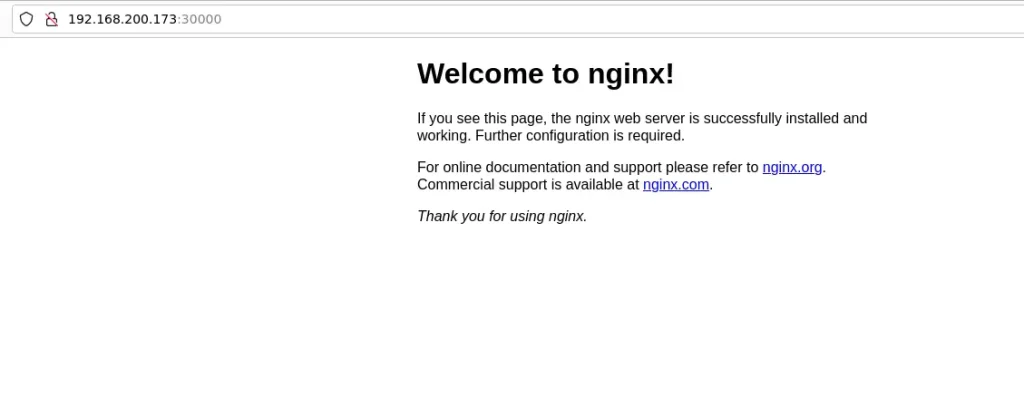

Enter http://worker-node-ip:30000 and you’ll be taken to the welcome page of nginx below.
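The same check can be scripted instead of done in a browser. The sketch below pulls the node port out of the PORT(S) column of kubectl get svc; service_node_port is a hypothetical helper name, and it assumes the default table layout with a port:nodePort/protocol entry, as shown for nginx-service above.

```shell
# Sketch: print the node port of the named service.
# Reads the default `kubectl get svc` table from stdin and only considers
# rows whose PORT(S) column contains a "port:nodePort" pair.
service_node_port() {
  awk -v svc="$1" '$1 == svc && index($5, ":") {
    split($5, parts, "[:/]")   # "80:30000/TCP" -> 80, 30000, TCP
    print parts[2]
  }'
}

# Usage (worker-node-ip is a placeholder for your worker's address):
# port=$(sudo k0s kubectl get svc | service_node_port nginx-service)
# curl -sI "http://worker-node-ip:$port"
```

A ClusterIP service has no node port, so the function prints nothing for it.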

Uninstalling k0s Kubernetes Cluster

To remove k0s, you must first stop the k0s service and then remove the installation from each node.

sudo k0s stop

Remove the k0s setup.

sudo k0s reset

Conclusion

We have covered the installation of MicroK8s and k0s Kubernetes clusters on Debian 12. k0s is the best choice if you want a highly customizable Kubernetes distribution, and it prioritizes a fast, feature-rich setup, particularly for local development and testing. For convenience, MicroK8s might be a better fit. Your use case and requirements will ultimately determine which option is best. I hope you found this guide informative.